We Built AI That Can Act.

We Forgot to Build What Stops It.

For years, we told ourselves a comforting story about AI.

If we documented it.

If we governed it.

If we explained it.

It would be safe.

That story is now collapsing.

Because the AI we are deploying today no longer just predicts.

It acts.

It reasons.

It plans.

It executes tools.

It coordinates with other agents.

And when it fails, it fails at speed.

The Fatal Assumption in Modern AI

Modern AI governance assumes something subtle—and dangerously wrong:

That safety can be decided before deployment.

That assumption worked when AI systems were static.

It fails completely when systems are:

• Autonomous

• Persistent

• Self-directed

• Embedded in real-world workflows

Agentic AI does not fail once.

It fails over time, in interaction, under pressure.

Compliance Is Not Safety

A system can be:

• Fully documented

• Perfectly classified

• Technically explainable

…and still cause harm.

Why?

Because compliance governs intent.

Safety governs behaviour.

And behaviour only reveals itself in motion.

The Missing Institution

In aviation, we do not ask pilots to certify their own aircraft.

In finance, we do not let banks audit their own risk models.

In medicine, we do not let drug companies approve their own trials.

Yet in AI, we do exactly that.

We build.

We test.

We deploy.

We declare ourselves “responsible”.

This is not maturity.

It is adolescence.

Agentic AI Changes the Question

The real question is no longer:

“Is the model accurate?”

It is:

• Should this system be allowed to act without consent?

• Who intervenes when objectives drift?

• What happens when agents reinforce each other’s errors?

• Who carries responsibility when harm emerges slowly?

These are safety questions, not technical ones.

Why a Safety Control Plane Is Inevitable

Agentic AI forces a new reality:

• Safety must be continuous

• Oversight must be independent

• Intervention must be real-time

• Impact must be human-centred

Anything less is theatre.

The future of AI will not be decided by who builds the most powerful models.

It will be decided by who builds the systems that keep them aligned, bounded, and humane.

Final Thought

We spent a decade teaching machines how to think.

The next decade will be about teaching ourselves how to stop them—when we must.

That is the work ahead.

For the last decade, enterprises have focused on two questions:

• Can we build AI at scale?

• Can we govern it responsibly?

These questions made sense when AI systems were predictive, bounded, and tool-like.

They are dangerously insufficient in the age of agentic AI.

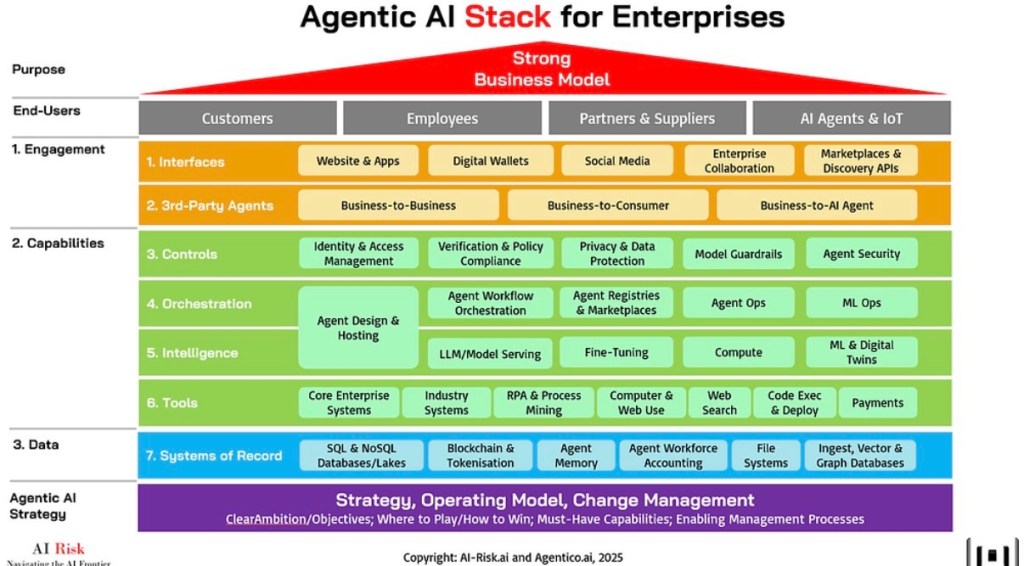

As AI systems move from models to actors—capable of reasoning, planning, executing tasks, and coordinating with other agents—we need a third, missing capability:

Independent, continuous AI safety and assurance across the full lifecycle.

This post introduces the AI Safety Stack—a layered, technical view of where today’s platforms stop, where risks accumulate, and why a new safety control plane is required.

As AI systems evolve from passive models into autonomous, agentic systems, traditional approaches to building and governing AI are no longer sufficient. What is required is a full-stack safety architecture that spans from infrastructure through to human and societal impact. This document presents a comprehensive AI Safety Stack, designed to be brand-agnostic, regulator-aligned, and future-proof.

1. Visual Overview of the AI Safety Stack

The AI Safety Stack is a layered architecture. Each layer addresses a distinct class of technical, operational, and societal risk. Safety is not achieved at any single layer; it emerges from coherent coverage across all layers.

————————————————–

L7: Human & Societal Impact

L6: Independent AI Assurance & Certification

L5: Runtime & Agentic Safety

L4: Enterprise AI Governance & Risk

L3: Model Governance & Explainability

L2: AI / ML / LLM Operations

L1: Data & Compute Foundation

————————————————–

The AI Safety Stack – Overview

┌──────────────────────────────────────────────┐

│ HUMAN & SOCIETAL IMPACT │

│ • Intent • Harm • Autonomy • Trust │

│ ▶ YOUR AI SAFETY PLATFORM │

├──────────────────────────────────────────────┤

│ INDEPENDENT AI ASSURANCE & CERTIFICATION │

│ • Red-teaming • Stress testing • Sign-off │

│ ▶ YOUR AI SAFETY PLATFORM │

├──────────────────────────────────────────────┤

│ RUNTIME & AGENTIC SAFETY │

│ • Agent behaviour • Drift • Escalation │

│ ▶ YOUR AI SAFETY PLATFORM │

├──────────────────────────────────────────────┤

│ ENTERPRISE AI GOVERNANCE & RISK │

│ • Policies • Controls • EU AI Act • Audit │

│ ▶ OneTrust │

├──────────────────────────────────────────────┤

│ MODEL GOVERNANCE & EXPLAINABILITY │

│ • Lineage • Bias • Explainability │

│ ▶ Dataiku │

├──────────────────────────────────────────────┤

│ AI / ML / LLM OPERATIONS │

│ • Build • Deploy • Monitor │

│ ▶ Dataiku │

├──────────────────────────────────────────────┤

│ DATA & COMPUTE FOUNDATION │

│ • Cloud • Data platforms • GPUs │

│ ▶ Enterprise stack │

└──────────────────────────────────────────────┘
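
The point that safety depends on coverage across every layer can be made concrete with a small coverage check. The sketch below is illustrative only: the `Layer` enum, the `uncovered_layers` helper, and the sample inventory are hypothetical names invented here, not part of any platform named in the diagram.

```python
from enum import IntEnum


class Layer(IntEnum):
    """The seven layers of the stack, numbered as in the overview."""
    DATA_AND_COMPUTE = 1
    OPERATIONS = 2
    MODEL_GOVERNANCE = 3
    ENTERPRISE_GOVERNANCE = 4
    RUNTIME_AGENTIC_SAFETY = 5
    INDEPENDENT_ASSURANCE = 6
    HUMAN_SOCIETAL_IMPACT = 7


def uncovered_layers(controls: dict[Layer, list[str]]) -> list[Layer]:
    """Return the layers with no control assigned, lowest layer first."""
    return [layer for layer in Layer if not controls.get(layer)]


# Hypothetical inventory: existing platforms cover layers 1 to 4 only.
inventory = {
    Layer.DATA_AND_COMPUTE: ["cloud platform"],
    Layer.OPERATIONS: ["MLOps platform"],
    Layer.MODEL_GOVERNANCE: ["lineage and bias tooling"],
    Layer.ENTERPRISE_GOVERNANCE: ["policy and audit tooling"],
}

gaps = uncovered_layers(inventory)
print([layer.name for layer in gaps])
# ['RUNTIME_AGENTIC_SAFETY', 'INDEPENDENT_ASSURANCE', 'HUMAN_SOCIETAL_IMPACT']
```

An inventory like this says nothing about how good each control is. It only shows where no control exists at all, which is the gap the upper three layers of the diagram point to.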

Layer 1: Data & Compute Foundation

This layer consists of cloud infrastructure, compute accelerators, data platforms, and ingestion pipelines. Risks at this layer include data poisoning, leakage, bias embedded in historical data, and infrastructure misconfiguration. While foundational, this layer does not determine safety outcomes on its own—it merely supplies capability.

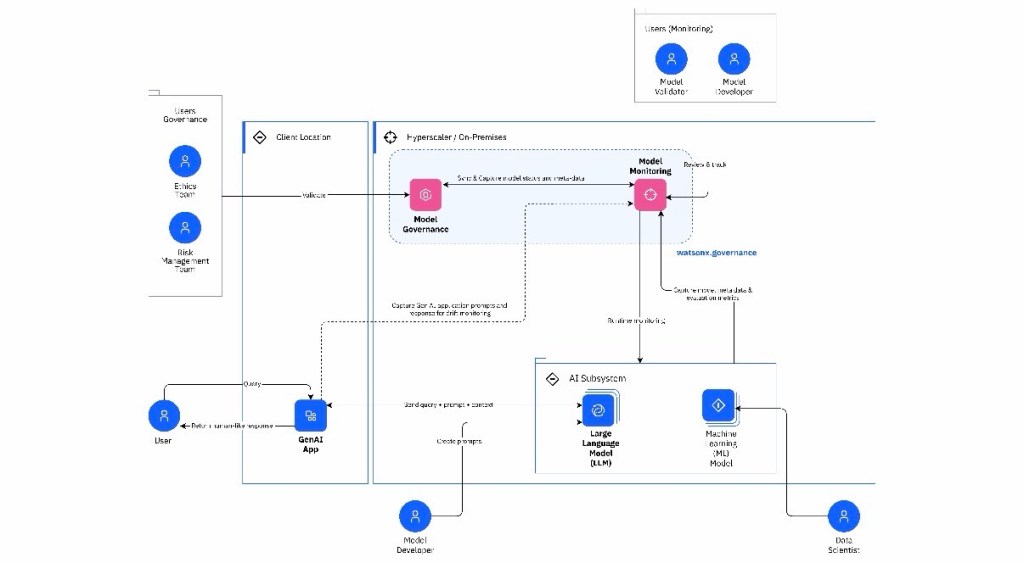

Layer 2: AI / ML / LLM Operations

Here, models and agents are trained, deployed, and monitored. Pipelines, orchestration, and operational metrics dominate. Safety is typically interpreted as performance stability and reliability. However, correctness does not imply safety, particularly when systems are allowed to act autonomously.

Layer 3: Model Governance & Explainability

This layer introduces transparency through lineage tracking, explainability techniques, bias measurement, and approval workflows. It enables retrospective understanding of model decisions but is inherently limited in preventing emergent or multi-agent behaviours.

Layer 4: Enterprise AI Governance & Risk

Enterprise governance introduces policies, risk classification, regulatory alignment, and auditability. This layer ensures procedural accountability and compliance. However, compliance alone does not equate to real-world safety, especially under dynamic conditions.

Layer 5: Runtime & Agentic Safety

This is where current architectures are weakest. Runtime safety addresses live agent behaviour, autonomy boundaries, goal drift, escalation protocols, and interaction with humans and other agents. Safety here must be continuous, contextual, and adaptive.
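
To make autonomy boundaries and escalation less abstract, here is a minimal sketch of a runtime guard that sits between an agent and its tools. Everything in it is an assumption made for illustration: the `RuntimeGuard` class, the tool names, and the action budget are hypothetical, and a real runtime layer would also need context, identity, and tamper-resistant logging.

```python
from dataclasses import dataclass, field
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    ESCALATE = "escalate"  # pause the agent and hand off to a human
    BLOCK = "block"


@dataclass
class RuntimeGuard:
    allowed_tools: set[str]    # autonomy boundary: tools the agent may call
    escalate_tools: set[str]   # allowed tools that still need human approval
    max_actions: int           # budget of autonomous actions per task
    actions_taken: int = 0
    log: list[tuple[str, Decision]] = field(default_factory=list)

    def check(self, tool: str) -> Decision:
        """Decide, before execution, whether a tool call may proceed."""
        if tool not in self.allowed_tools:
            decision = Decision.BLOCK
        elif self.actions_taken >= self.max_actions:
            decision = Decision.ESCALATE  # budget exhausted
        elif tool in self.escalate_tools:
            decision = Decision.ESCALATE
        else:
            decision = Decision.ALLOW
            self.actions_taken += 1
        self.log.append((tool, decision))  # every decision is recorded
        return decision


guard = RuntimeGuard(
    allowed_tools={"search", "send_email"},
    escalate_tools={"send_email"},
    max_actions=2,
)
for tool in ["search", "send_email", "delete_records", "search", "search"]:
    print(tool, guard.check(tool).value)
# search allow
# send_email escalate
# delete_records block
# search allow
# search escalate
```

The design choice worth noting is that the guard decides before the tool runs and records every decision, so intervention happens in real time rather than in a later audit.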

Layer 6: Independent AI Assurance & Certification

This layer provides independent testing, red-teaming, stress-testing, and formal certification. Independence is essential to avoid conflicts of interest. This mirrors assurance models in aviation, finance, and healthcare.

Layer 7: Human & Societal Impact

At the highest layer, safety is defined in human terms: autonomy, dignity, trust, and systemic impact. This layer asks not whether a system can function, but whether it should exist, act, or scale. Human Experience (HX) becomes the ultimate safety metric.

Conclusion

The AI Safety Stack reveals a structural gap in today’s AI ecosystems. Without independent, runtime, and human-centric safety layers, agentic AI systems will outpace existing controls. A dedicated AI safety control plane is not optional—it is inevitable.

“Today’s platforms stop at building and governing AI.

Our platform provides the missing independent safety and assurance layer — especially critical as AI becomes agentic.”

Appendix A: What Breaks When AI Becomes Agentic

When AI systems become agentic, several assumptions embedded in current governance and ML practices fail:

– Static risk assessments cannot capture dynamic goal drift

– Model-level explainability cannot explain multi-step agent plans

– Policy controls cannot constrain autonomous tool execution

– Human-in-the-loop becomes impractical at scale

– Accountability becomes ambiguous across agent chains

Agentic AI requires runtime supervision, behavioural constraints, and independent assurance.
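
One simple way to picture runtime supervision of drift is to compare what an agent has been doing recently with what it did when it was approved. The sketch below uses total variation distance between action frequencies. The function names, the sample actions, and the 0.3 threshold are all assumptions for illustration; real goal drift is harder to detect than a shift in action counts.

```python
from collections import Counter


def action_distribution(actions: list[str]) -> dict[str, float]:
    """Convert a list of action names into relative frequencies."""
    if not actions:
        raise ValueError("at least one action is required")
    counts = Counter(actions)
    total = sum(counts.values())
    return {name: n / total for name, n in counts.items()}


def total_variation(p: dict[str, float], q: dict[str, float]) -> float:
    """Total variation distance: 0 means identical, 1 means disjoint."""
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)


def has_drifted(baseline: list[str], recent: list[str],
                threshold: float = 0.3) -> bool:
    """Flag drift when recent behaviour departs from the approved baseline."""
    distance = total_variation(action_distribution(baseline),
                               action_distribution(recent))
    return distance > threshold


# Hypothetical logs: behaviour at approval time versus the last hour.
baseline = ["read"] * 8 + ["write"] * 2
recent = ["read"] * 3 + ["write"] * 3 + ["delete"] * 4

print(has_drifted(baseline, recent))    # True
print(has_drifted(baseline, baseline))  # False
```

A static risk assessment would have seen only the baseline. The check has to run continuously to notice that "delete" has appeared at all.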

Appendix B: Regulatory Mapping (High-Level)

The AI Safety Stack aligns naturally with emerging regulatory frameworks:

• EU AI Act:

– Risk classification and governance → Layer 4

– Post-market monitoring → Layer 5

– Human oversight and fundamental rights → Layer 7

• AI Verify (Singapore, IMDA):

– Transparency and explainability → Layer 3

– Accountability and governance → Layer 4

– Robustness and monitoring → Layers 5 and 6

This mapping highlights that regulation implicitly assumes safety layers that most enterprises do not yet possess.
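
The mapping above can be held as a plain lookup table and queried for the requirements an organisation cannot yet support. This is a sketch only: `REGULATORY_MAP` restates the high-level mapping from this appendix, it is not a legal interpretation, and `unmet_requirements` is a hypothetical helper.

```python
# Framework -> requirement -> stack layers it relies on (from Appendix B).
REGULATORY_MAP: dict[str, dict[str, list[int]]] = {
    "EU AI Act": {
        "Risk classification and governance": [4],
        "Post-market monitoring": [5],
        "Human oversight and fundamental rights": [7],
    },
    "AI Verify": {
        "Transparency and explainability": [3],
        "Accountability and governance": [4],
        "Robustness and monitoring": [5, 6],
    },
}


def unmet_requirements(implemented: set[int]) -> list[tuple[str, str]]:
    """List (framework, requirement) pairs that rely on a missing layer."""
    unmet = []
    for framework, requirements in REGULATORY_MAP.items():
        for requirement, layers in requirements.items():
            if not set(layers) <= implemented:
                unmet.append((framework, requirement))
    return unmet


# Assumption: an enterprise that has built layers 1 to 4 only.
for framework, requirement in unmet_requirements({1, 2, 3, 4}):
    print(f"{framework}: {requirement}")
# EU AI Act: Post-market monitoring
# EU AI Act: Human oversight and fundamental rights
# AI Verify: Robustness and monitoring
```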

Rethinking AI Safety ☣️ in the Age of Agentic Systems

Agentic AI can plan, adapt, and act—autonomously. Legacy safety frameworks won’t cut it. We need new tools for new minds.

Rethinking Safety in the Age of Agentic AI: New Minds, New Risks, New Rules: https://lnkd.in/gccUntBs

🔗Part 1: https://lnkd.in/gP_5HzP4

🔗Part 2: https://lnkd.in/gtq_bDJs

🔗Part 3: https://lnkd.in/gqpKwC-R

🔗Part 4: https://lnkd.in/gterfz-w

🔗 https://lnkd.in/gJzwNJDT (The Dark Side of Agentic AI)

Agentic AI Safety Essentials: From Theory to Enterprise Practice

🔗https://lnkd.in/g8eXzTwz

