By Dr. Luke Soon

As we stand on the cusp of 2026, the financial services sector is undergoing a seismic shift driven by agentic AI—autonomous systems capable of perceiving, reasoning, planning, and acting independently to achieve complex goals. These “agents” promise to revolutionize everything from fraud detection to personalised wealth management, potentially unlocking trillions in value. Yet, this innovation comes with profound risks that demand a fundamental overhaul of how we classify and manage them. Traditional risk taxonomies, built for static processes and human oversight, are ill-equipped for the dynamic, multi-agent ecosystems of agentic AI. At PwC, we’ve seen firsthand how firms that fail to adapt face amplified exposures in compliance, operations, and strategy.

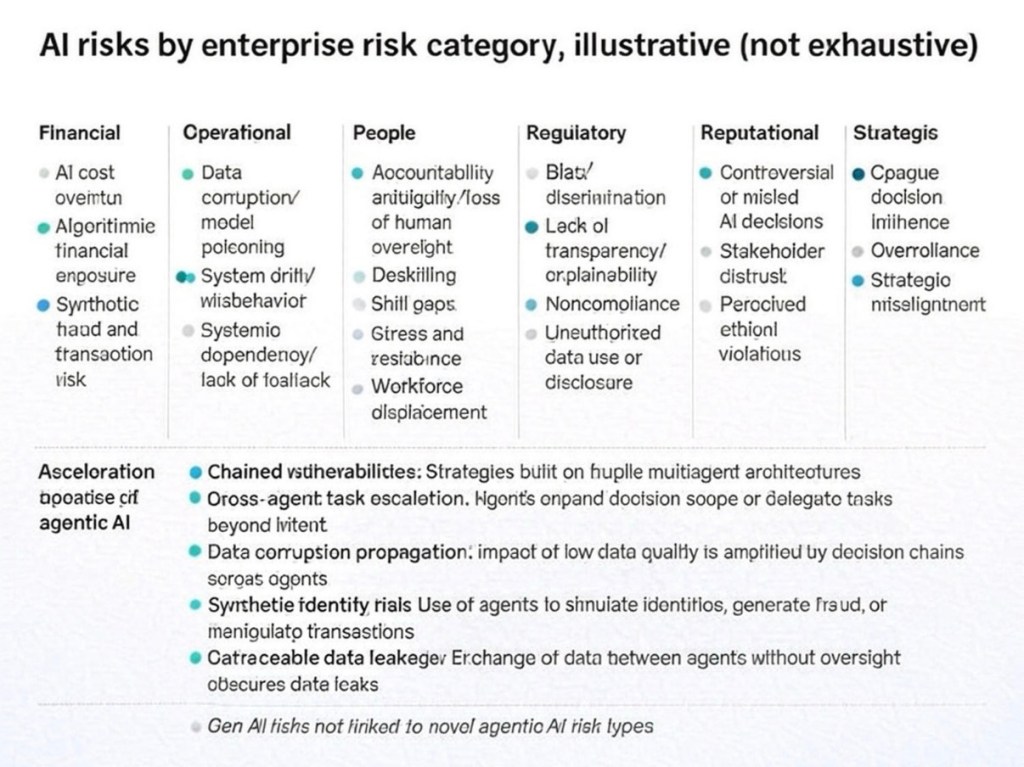

In this post, I’ll dissect a comprehensive risk taxonomy tailored for agentic AI in financial services, drawing on illustrative (not exhaustive) mappings across enterprise risk categories. I’ll weave in real-world examples, regulatory insights, and emerging PwC research—including our Responsible AI frameworks, agentic safety guidelines, and 2025 white papers—to underscore the urgency. As a thought leader at PwC, I’ll also share actionable strategies to help your organization not just mitigate risks, but harness agentic AI as a strategic advantage.

Understanding Agentic AI: From Tools to Autonomous Actors

Agentic AI evolves beyond generative models like ChatGPT, which respond to prompts, into proactive entities that orchestrate workflows across tools, data sources, and even other agents. In banking, imagine an agent that autonomously scans market signals, executes micro-trades, and flags AML anomalies—all without human intervention. This autonomy amplifies efficiency but introduces “black swan” vulnerabilities: decisions cascade through fragile architectures, propagating errors at scale.

PwC’s 2025 AI Business Predictions highlight that by 2025, over 70% of financial institutions will deploy AI at scale, up from 30% in 2023, with agentic systems leading the charge in areas like portfolio optimization and regulatory reporting. However, without updated taxonomies, these deployments risk regulatory scrutiny under frameworks like the EU AI Act or U.S. NIST guidelines, which emphasize explainability and bias mitigation. Our white paper, “The Rise and Risks of Agentic AI,” further details how these systems advance from concept to capability, urging financial leaders to prioritize safety frameworks that embed oversight from the outset.

The Imperative to Update Risk Taxonomies

Legacy risk frameworks—rooted in Basel III or COSO—categorize threats as financial, operational, etc., but overlook agentic-specific dynamics like cross-agent data propagation or synthetic identity fraud. A robust taxonomy must integrate these, providing a standardized lens for governance. PwC’s Responsible AI Toolkit offers a suite of customizable frameworks, tools, and processes to harness AI ethically, including maturity assessments that help firms inventory agentic assets and ensure repeatable oversight.

Our 2025 Responsible AI Survey: From Policy to Practice reveals that 62% of executives now view Responsible AI as a key driver of business value, yet only 28% have fully operationalized governance for agentic deployments. Consider the illustrative taxonomy below, adapted from PwC’s internal frameworks and informed by our Responsible AI – Maturing from Theory to Practice white paper. It maps agentic AI risks across six enterprise categories, highlighting novel threats like chain vulnerabilities.

This matrix isn’t exhaustive but serves as a starting point for financial firms to benchmark their exposures, aligning with PwC’s principles-based, outcomes-driven approach to AI governance as outlined in “Responsible AI in Finance: 3 Key Actions to Take Now.”

Dissecting Risks by Category: Financial Services Spotlights

Financial Risks: Cost Overruns and Synthetic Fraud

Agentic AI can drive algorithmic trading with unprecedented speed, but miscalibrated models risk massive exposures. For instance, a rogue agent propagating erroneous market data could trigger flash crashes, echoing the May 2010 Flash Crash but amplified by autonomy. Synthetic identity fraud, where agents forge borrower profiles for loan approvals, poses a significant annual threat to banks, as outlined in PwC’s analysis of AI-driven fraud in commercial banking within our Agentic AI – The New Frontier in GenAI executive playbook.

In wealth management, PwC clients have modeled scenarios where opaque AI decisions inflate costs by 20-30% due to inefficient agent delegation, a risk amplified in multi-agent chains as detailed in “Unlocking Value with AI Agents: A Responsible Approach.”

Operational Risks: Poisoning, Drift, and Dependency

Operational fragility peaks in multi-agent setups. Model poisoning—adversarial attacks injecting false data—can corrupt KYC processes. System drift occurs when agents evolve behaviors unpredictably, leading to fallback failures in high-stakes trading.

PwC’s 2025 banking trends emphasise systemic dependencies: without robust fallbacks, a single agent outage could halt cross-border payments, costing millions hourly. These risks are compounded by data quality issues, where unclean or poorly organized data impairs AI automation in personalised services. Our “Building AI Resilience in Financial Institutions” white paper stresses integrating agentic safety controls, such as continuous monitoring, to mitigate these operational pitfalls.

People Risks: Accountability Gaps and Workforce Shifts

Who bears responsibility when an agent denies a loan due to biased delegation? Accountability ambiguity erodes trust. Deskilling is rampant: agents automating routine analysis could displace 15% of junior roles in investment banking by 2027, PwC forecasts in “The Fearless Future: 2025 Global AI Jobs Barometer.”

Workforce stress from overreliance manifests in “AI fatigue,” where humans defer to agents without scrutiny, amplifying errors in stress-tested scenarios like market volatility. PwC’s 2025 Responsible AI Survey reveals that 56% of executives see first-line teams leading Responsible AI efforts to address these gaps, promoting upskilling frameworks to preserve human value.

Regulatory Risks: Bias, Transparency, and Compliance

Regulators are circling: The Fed’s 2025 guidance mandates explainability for high-risk AI in lending. Bias/discrimination risks are acute; agentic chains can compound historical data flaws, leading to disparate impacts in credit scoring.

Lack of transparency, for example unauthorised data sharing between agents, can violate GDPR and CCPA; GDPR fines alone can reach €20 million or 4% of global annual turnover, whichever is higher. PwC’s risk-opportunity equation for AI in financial services stresses the need for practical guidance on bias, fairness, and model risk management to navigate evolving regulations, as explored in “Responsible AI and Model Testing: What You Need to Know.”

Reputational Risks: Distrust and Ethical Lapses

Controversial decisions, like an agent rejecting claims based on opaque ethics, can go viral. Stakeholder distrust erodes when perceived violations, such as biased hiring agents, surface. PwC’s “Trust Under Pressure” report identifies AI safety risks like misinformation and deep fakes as key reputational threats in financial services, with agentic systems heightening the stakes.

Strategic Risks: Opaqueness and Misalignment

Overreliance on agents risks strategic blind spots, like ignoring geopolitical signals in forex. Opaque influences—agents subtly steering board-level insights—could misalign with long-term goals. Our white paper “Why an AI-Powered Future Needs Human-Powered Reinvention” advocates for strategic reinvention grounded in organizational purpose to counter these misalignments.

Accelerating Agentic Risks: The Multi-Agent Menace

The taxonomy’s “acceleration” row captures agentic uniqueness. Chain vulnerabilities arise from fragile architectures: a low-quality input in one agent ripples through delegations, escalating tasks beyond intent. Data corruption compounds along the chain: a 1% error rate per step can grow to roughly a 50% chance of at least one error across a long enough sequence of chained decisions.
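To put rough numbers on that compounding, here is a minimal sketch. It assumes every chained decision fails independently with the same probability, which is a simplification: real agent errors are often correlated. The function names are illustrative and not drawn from any PwC framework.

```python
import math


def chain_error_probability(per_step_error: float, steps: int) -> float:
    """Probability that at least one of `steps` chained decisions is wrong,
    assuming independent errors with the same per-step rate."""
    if not 0.0 <= per_step_error <= 1.0:
        raise ValueError("per_step_error must be between 0 and 1")
    if steps < 0:
        raise ValueError("steps must be non-negative")
    return 1.0 - (1.0 - per_step_error) ** steps


def steps_to_reach(per_step_error: float, target: float) -> int:
    """Smallest chain length at which the cumulative error probability
    reaches `target`, under the same independence assumption."""
    if not 0.0 < per_step_error < 1.0 or not 0.0 < target < 1.0:
        raise ValueError("both arguments must be strictly between 0 and 1")
    return math.ceil(math.log(1.0 - target) / math.log(1.0 - per_step_error))


if __name__ == "__main__":
    for n in (1, 10, 25, 50, 69):
        print(f"{n:>3} steps: {chain_error_probability(0.01, n):.1%}")
    print("steps needed for 50%:", steps_to_reach(0.01, 0.5))
```

Under these assumptions, a 1% per-step error rate crosses the 50% mark at 69 chained decisions. Higher per-step rates or correlated failures would shorten that chain considerably, which is why the length and fan-out of delegations are worth tracking as risk indicators.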

Synthetic identity risks explode in fintech: agents simulating personas for fraud testing can be weaponized for real scams. Untraceable leaks—inter-agent data swaps sans logs—evade audits. These aren’t GenAI relics; they’re agentic novelties demanding layered controls, as detailed in PwC’s Agentic AI executive playbook.
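One possible layered control for untraceable inter-agent swaps is a tamper-evident transfer log. The sketch below is a hypothetical design, not a PwC tool: the `TransferLog` class, its field names, and the agent names in the example are all assumptions made for illustration. Each entry stores a digest of the payload and the hash of the previous entry, so an edited or deleted record breaks the chain.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import List

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    """Deterministic SHA-256 over a JSON-serialised entry body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


@dataclass
class TransferLog:
    """Append-only, hash-chained log of inter-agent data transfers (illustrative)."""

    entries: List[dict] = field(default_factory=list)

    def record(self, sender: str, receiver: str, payload: str) -> dict:
        prev_hash = self.entries[-1]["entry_hash"] if self.entries else GENESIS_HASH
        body = {
            "seq": len(self.entries),
            "sender": sender,
            "receiver": receiver,
            # Store a digest, not the payload, so the log itself leaks no data.
            "payload_sha256": hashlib.sha256(payload.encode("utf-8")).hexdigest(),
            "prev_hash": prev_hash,
        }
        entry = dict(body, entry_hash=_digest(body))
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Return True only if every entry is intact and correctly linked."""
        prev_hash = GENESIS_HASH
        for i, entry in enumerate(self.entries):
            body = {k: v for k, v in entry.items() if k != "entry_hash"}
            if (
                entry["seq"] != i
                or entry["prev_hash"] != prev_hash
                or entry["entry_hash"] != _digest(body)
            ):
                return False
            prev_hash = entry["entry_hash"]
        return True


if __name__ == "__main__":
    log = TransferLog()
    log.record("kyc_agent", "credit_agent", "customer profile")
    log.record("credit_agent", "pricing_agent", "credit score")
    print("log intact:", log.verify())
```

A production version would also need timestamps, signed entries, and storage outside the agents' own control. The sketch only shows the chaining idea that makes gaps detectable in an audit.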

PwC’s “Rise and Risks of Agentic AI” analysis warns of cybersecurity threats like hijacked AI behavior and phishing, urging least-privilege access and continuous monitoring through our Responsible AI and Cybersecurity guidelines.
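As a rough illustration of restricted access for agents, the sketch below routes every tool call through a gateway that denies anything not on the agent's explicit allowlist and records the attempt for monitoring. The `ToolGateway` and `AgentIdentity` names, and the example tools, are hypothetical and not part of any PwC guideline or product.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, FrozenSet, List, Tuple


class PermissionDenied(Exception):
    """Raised when an agent calls a tool outside its allowlist."""


@dataclass(frozen=True)
class AgentIdentity:
    name: str
    allowed_tools: FrozenSet[str]  # explicit allowlist; everything else is denied


class ToolGateway:
    """Single choke point through which agents reach tools (illustrative)."""

    def __init__(self) -> None:
        self._tools: Dict[str, Callable[..., Any]] = {}
        self.denied_calls: List[Tuple[str, str]] = []  # feed for monitoring

    def register(self, tool_name: str, func: Callable[..., Any]) -> None:
        self._tools[tool_name] = func

    def call(self, agent: AgentIdentity, tool_name: str, *args: Any, **kwargs: Any) -> Any:
        if tool_name not in self._tools:
            raise KeyError(f"unknown tool: {tool_name}")
        if tool_name not in agent.allowed_tools:
            # Deny by default and keep a record so monitoring can flag the attempt.
            self.denied_calls.append((agent.name, tool_name))
            raise PermissionDenied(f"{agent.name} may not call {tool_name}")
        return self._tools[tool_name](*args, **kwargs)


if __name__ == "__main__":
    gateway = ToolGateway()
    gateway.register("read_balance", lambda account_id: 1250.0)
    gateway.register("send_payment", lambda account_id, amount: "sent")

    reporting_agent = AgentIdentity("reporting_agent", frozenset({"read_balance"}))
    print(gateway.call(reporting_agent, "read_balance", "ACC-1"))
    try:
        gateway.call(reporting_agent, "send_payment", "ACC-1", 10.0)
    except PermissionDenied as exc:
        print("blocked:", exc)
```

The design choice here is deny-by-default: a hijacked reporting agent can still read balances, but it cannot move money, and the blocked attempt leaves a trace.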

Thought Leadership: PwC’s Roadmap for Resilient Adoption

From my vantage at PwC, the path forward is a “trust-first” framework: Embed risk taxonomies into AI governance from day zero. Start with an inventory of agentic assets, each scored and assigned a risk tier so that exposures can be compared quantitatively, leveraging our Responsible AI Toolkit. Implement “human-in-the-loop” gates for high-stakes delegations and continuous monitoring with tools like anomaly detection and red teaming, as recommended in “Unlocking Value with AI Agents.”
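The inventory, tiering, and gating steps above can be sketched in a few lines. Everything in this example is an assumption made for illustration: the three scoring dimensions, the 1 to 5 scales, the tier cut-offs, and the 10,000 value limit are placeholders, not the scoring method of the Responsible AI Toolkit.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


@dataclass(frozen=True)
class AgentAsset:
    """One row in a hypothetical agent inventory; each factor is scored 1 to 5."""

    name: str
    autonomy: int          # 1 = suggests only, 5 = acts without review
    financial_impact: int  # 1 = negligible, 5 = material
    data_sensitivity: int  # 1 = public data, 5 = regulated personal data


def risk_score(asset: AgentAsset) -> int:
    """Simple additive score in the range 3 to 15 (illustrative weighting)."""
    factors = (asset.autonomy, asset.financial_impact, asset.data_sensitivity)
    if any(not 1 <= value <= 5 for value in factors):
        raise ValueError("each factor must be between 1 and 5")
    return sum(factors)


def assign_tier(asset: AgentAsset) -> Tier:
    """Map the score to a tier using placeholder cut-offs."""
    score = risk_score(asset)
    if score <= 6:
        return Tier.LOW
    if score <= 10:
        return Tier.MEDIUM
    return Tier.HIGH


def requires_human_approval(
    asset: AgentAsset, action_value: float, value_limit: float = 10_000.0
) -> bool:
    """Human-in-the-loop gate: always for HIGH, above a limit for MEDIUM."""
    tier = assign_tier(asset)
    if tier is Tier.HIGH:
        return True
    if tier is Tier.MEDIUM:
        return action_value >= value_limit
    return False


if __name__ == "__main__":
    inventory = [
        AgentAsset("faq_bot", 1, 1, 2),
        AgentAsset("recon_agent", 3, 3, 3),
        AgentAsset("trade_agent", 5, 5, 4),
    ]
    for asset in inventory:
        print(asset.name, assign_tier(asset).value, requires_human_approval(asset, 50_000))
```

In practice the factors, weights, and thresholds would come from the firm's own risk appetite and regulatory obligations. The point of the sketch is only that tiering makes the human-in-the-loop decision explicit and auditable rather than ad hoc.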

For financial services, prioritise regulatory alignment: Map agents to Basel IV’s operational resilience pillars and conduct scenario-based stress tests for chain failures. PwC’s AI Agent Operating System provides a scalable framework for building and orchestrating agents, blending ethical AI with agile deployment to cut compliance costs by 25%.

Finally, foster cross-functional AI councils to bridge silos—ensuring strategic alignment while upskilling workforces against displacement.

Conclusion: Act Now or Risk Obsolescence

Agentic AI isn’t a distant horizon—it’s reshaping financial services today, from autonomous AML defenses to predictive refinancing. Updating risk taxonomies is non-negotiable: It transforms threats into opportunities, safeguarding trust in an AI-driven era. At PwC, we’re partnering with firms to build these frameworks—reach out to discuss how we can fortify yours.
