By Dr Luke Soon | November 2025

1. Introduction: Why Memory Matters in Agentic AI

As artificial intelligence evolves from static models to agentic systems (autonomous, goal-driven entities that plan, reason, and act), memory becomes central to intelligence itself. Traditional Large Language Models (LLMs) excel at language generation but are stateless between calls. They operate within the limits of a context window and, on their own, keep no persistent record of prior actions, user history, or environmental feedback.

Agentic AI changes this paradigm. It introduces layered memory systems that persist across time, contexts, and agents — allowing continuity, learning, and adaptation. These memory systems enable autonomous orchestration, multi-agent collaboration, and contextual recall — turning reactive models into proactive cognitive ecosystems.

This marks the shift from Generative AI (GenAI) to Agentic AI (AgAI) — from prediction to participation, from information to intelligence.

2. The Six Pillars of Memory in Agentic Systems

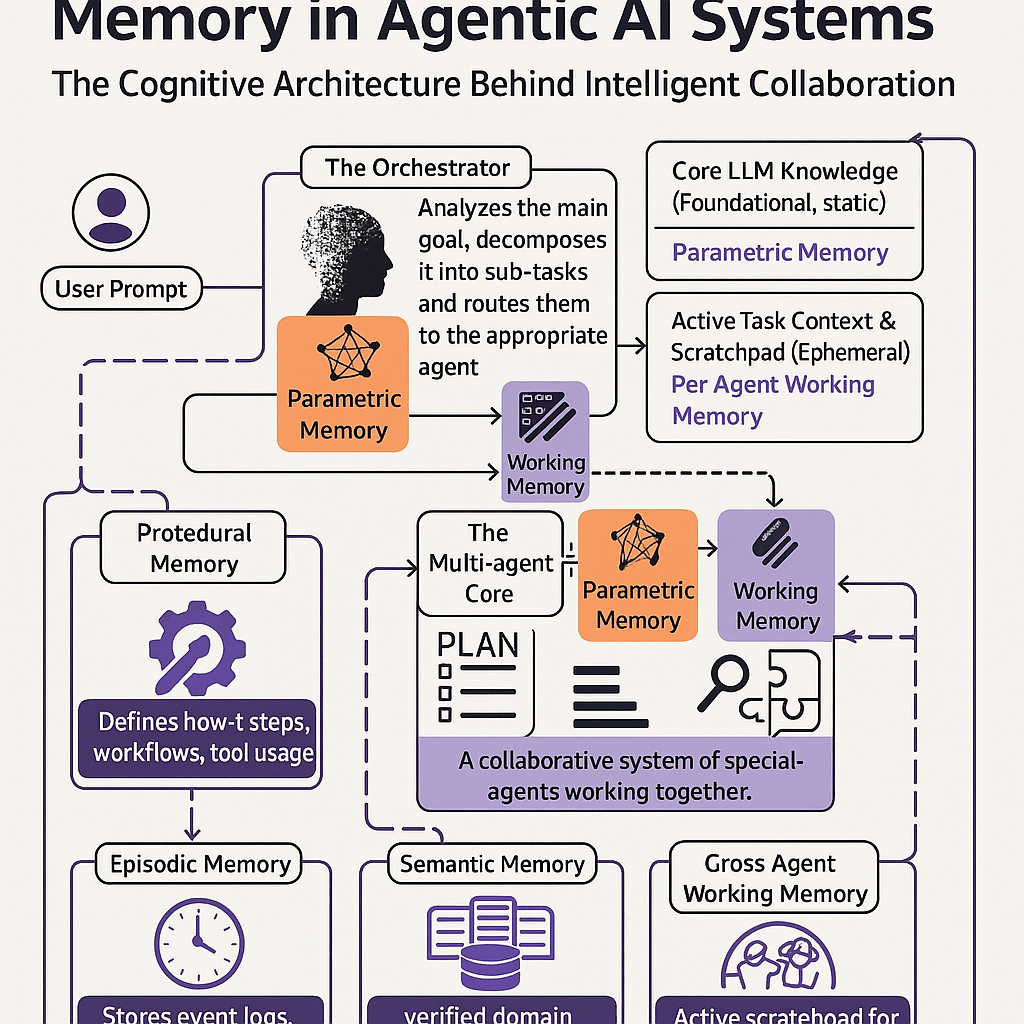

Agentic AI frameworks often integrate six complementary memory subsystems, each analogous to a function in human cognition: parametric, working, procedural, episodic, semantic, and cross-agent working memory.

Each component functions independently yet interacts through an orchestrator — a meta-agent responsible for decomposition, planning, and coordination.

3. The Orchestrator: Cognitive Control in Agentic Architectures

At the top sits the Orchestrator, which interprets the user’s prompt, breaks it into subtasks, and routes them to appropriate specialised agents. It maintains:

- Parametric Memory for foundational LLM knowledge,
- Working Memory for live context (ephemeral scratchpad), and
- Procedural Memory for rules governing how subtasks are executed.

This mirrors the central executive function in the human brain’s prefrontal cortex — integrating sensory, semantic, and episodic cues to manage complex behaviours.

Recent research from Stanford’s Center for Research on Foundation Models (Bommasani et al., 2023) and OpenAI’s “Planning-Capable Language Models” (2024) highlights that effective orchestration demands not just reasoning but memory routing — dynamically determining which type of memory to invoke per task.
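Memory routing can be illustrated with a minimal sketch. Everything in it is hypothetical: the `MemoryKind` enum, the `route_memory` and `plan` functions, and the keyword rules are invented for illustration and are not taken from the cited work. A real orchestrator would more plausibly use a learned or LLM-based classifier than keyword matching.

```python
from enum import Enum


class MemoryKind(Enum):
    PARAMETRIC = "parametric"   # knowledge held in the model weights
    WORKING = "working"         # ephemeral scratchpad for the live task
    PROCEDURAL = "procedural"   # stored rules and workflows


# Hypothetical keyword rules, checked in order; the first match wins.
ROUTING_RULES = [
    (("how do i", "workflow", "steps to"), MemoryKind.PROCEDURAL),
    (("so far", "current", "just now"), MemoryKind.WORKING),
]


def route_memory(subtask: str) -> MemoryKind:
    """Pick which memory the orchestrator should consult for a subtask."""
    text = subtask.lower()
    for keywords, kind in ROUTING_RULES:
        if any(keyword in text for keyword in keywords):
            return kind
    # Fall back to the model's own knowledge when no rule matches.
    return MemoryKind.PARAMETRIC


def plan(prompt: str) -> list[tuple[str, MemoryKind]]:
    """Naively split a prompt on ';' into subtasks and route each one."""
    subtasks = [part.strip() for part in prompt.split(";") if part.strip()]
    return [(subtask, route_memory(subtask)) for subtask in subtasks]


routed = plan(
    "Summarise the current draft; list the steps to file the report; define XBRL"
)
for subtask, kind in routed:
    print(f"{kind.value:<10} <- {subtask}")
```

The point of the sketch is the separation of concerns: decomposition and routing are distinct steps, so the routing policy can be swapped out without touching how subtasks are produced.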

4. Multi-Agent Core: Collaboration Through Memory

The Multi-Agent Core enables multiple AI agents — planners, retrievers, reasoners, verifiers — to collaborate within a shared memory substrate. This architecture draws parallels to distributed cognition frameworks described in MIT CSAIL’s Agent-Based Cognitive Systems (Krakauer et al., 2024).

Agents access:

- Procedural Memory for task flow templates (e.g., retrieval-augmented generation, tool use),
- Episodic Memory for context continuity (past user intents, prior task outcomes), and
- Semantic Memory for trusted factual grounding (knowledge graphs, APIs, or policy repositories).

This distributed memory exchange reduces hallucinations, improves interpretability, and enhances accountability, all key principles of Agentic Safety (PwC Responsible AI Research, 2025).
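As a rough illustration of factual grounding, a verifier agent might check each drafted answer against semantic memory before it is released. The sketch below is an assumption-laden toy: `SEMANTIC_MEMORY`, `ground`, and the status labels are hypothetical names, and the single stored fact is a placeholder borrowed from the reporting example later in this article.

```python
from typing import Optional

# Hypothetical trusted store, keyed by (subject, attribute). The single entry
# is a placeholder, not real reference data.
SEMANTIC_MEMORY: dict[tuple[str, str], str] = {
    ("quarterly_report", "validation_standard"): "XBRL",
}


def ground(subject: str, attribute: str, draft: Optional[str]) -> dict:
    """Verifier step: compare a drafted answer against semantic memory."""
    trusted = SEMANTIC_MEMORY.get((subject, attribute))
    if trusted is None:
        # Nothing to ground against, so abstain rather than guess.
        return {"answer": None, "status": "unverified"}
    if draft != trusted:
        # The draft contradicts the trusted store; prefer the stored fact.
        return {"answer": trusted, "status": "corrected"}
    return {"answer": draft, "status": "verified"}


verified = ground("quarterly_report", "validation_standard", "XBRL")
corrected = ground("quarterly_report", "validation_standard", "XML")
unknown = ground("quarterly_report", "filing_deadline", "31 March")
```

Returning an explicit status alongside the answer is a design choice that also serves interpretability: downstream agents and auditors can see whether a statement was confirmed, overridden, or left ungrounded.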

5. Procedural Memory: The Engine of Action

Procedural memory encodes “how to do” — workflows, tools, and sequences of actions. It enables reusable automation.

For instance, a financial-report-generation agent can retrieve a stored workflow that defines:

Authenticate with data warehouse → Extract quarterly metrics → Apply XBRL validation → Generate insights → Push to compliance dashboard.

This procedural continuity is what transforms an LLM from “one-shot reasoning” to sustained autonomous execution.
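The stored workflow above can be sketched as an ordered list of named steps that an agent replays on demand. This is a minimal illustration under stated assumptions: `ProceduralMemory` and its methods are hypothetical, and each step is a stub returning placeholder values rather than a real warehouse call or XBRL validator.

```python
from typing import Callable

# A step reads the running state and returns the keys it wants to add or update.
Step = Callable[[dict], dict]


class ProceduralMemory:
    """Stores named workflows as ordered lists of (label, step) pairs."""

    def __init__(self) -> None:
        self._workflows: dict[str, list[tuple[str, Step]]] = {}

    def store(self, name: str, steps: list[tuple[str, Step]]) -> None:
        self._workflows[name] = list(steps)

    def run(self, name: str, context: dict) -> tuple[dict, list[str]]:
        """Replay a stored workflow; return the final state and a step trace."""
        state = dict(context)
        trace: list[str] = []
        for label, step in self._workflows[name]:
            state.update(step(state))
            trace.append(label)
        return state, trace


memory = ProceduralMemory()
memory.store("quarterly_report", [
    # Stub steps with placeholder data standing in for real integrations.
    ("authenticate", lambda s: {"session": "ok"}),
    ("extract_metrics", lambda s: {"metrics": {"revenue": 120, "costs": 80}}),
    ("validate_xbrl", lambda s: {"valid": all(v >= 0 for v in s["metrics"].values())}),
    ("generate_insights", lambda s: {"margin": s["metrics"]["revenue"] - s["metrics"]["costs"]}),
    ("push_to_dashboard", lambda s: {"published": s["valid"]}),
])

state, trace = memory.run("quarterly_report", {})
```

Because the workflow is data rather than code baked into a prompt, the same sequence can be retrieved, audited, and reused across runs.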

Relevant research:

- Microsoft Research, AutoGen: Enabling Next-Gen Multi-Agent Systems (Wu et al., 2023)
- Google DeepMind, Adaptive Agentic Systems with Tool Learning (2024)

6. Episodic and Semantic Memory: Learning from Experience

Episodic Memory records event logs, actions, and results. When combined with Semantic Memory, it forms a hybrid learning loop — the system not only recalls what happened but understands why it happened.

Episodic traces can be time-stamped and weighted (success/failure scores), feeding reinforcement loops. Semantic repositories, meanwhile, anchor responses in verifiable truth — essential for governance and compliance.
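One way to picture time-stamped, weighted episodic traces is a store that ranks past episodes by outcome score discounted by age. The sketch below is illustrative only: `Episode`, `EpisodicMemory`, and the exponential half-life decay are assumptions introduced here, not a scheme described in the frameworks listed below.

```python
from dataclasses import dataclass


@dataclass
class Episode:
    timestamp: float   # seconds on an arbitrary clock
    action: str
    outcome: str
    score: float       # 1.0 = success, 0.0 = failure


class EpisodicMemory:
    def __init__(self, half_life: float = 3600.0) -> None:
        self.half_life = half_life          # assumed decay constant, in seconds
        self.episodes: list[Episode] = []

    def record(self, episode: Episode) -> None:
        self.episodes.append(episode)

    def weight(self, episode: Episode, now: float) -> float:
        """Outcome score discounted by age (halves every half_life seconds)."""
        age = max(0.0, now - episode.timestamp)
        return episode.score * 0.5 ** (age / self.half_life)

    def recall(self, now: float, k: int = 3) -> list[Episode]:
        """Return the k episodes with the highest decayed weight."""
        ranked = sorted(
            self.episodes, key=lambda e: self.weight(e, now), reverse=True
        )
        return ranked[:k]


memory = EpisodicMemory(half_life=3600.0)
memory.record(Episode(0.0, "call_api_v1", "timeout", 0.0))
memory.record(Episode(0.0, "call_api_v2", "ok", 1.0))
memory.record(Episode(3600.0, "use_cache", "ok", 0.8))

best = memory.recall(now=3600.0, k=2)
```

In this toy run a recent, mostly successful episode outranks an older fully successful one, which is the kind of signal a reinforcement loop could feed back into planning.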

Notable frameworks include:

- Anthropic’s Constitutional AI and Reflective Memory (Bai et al., 2023)
- Meta AI’s MemGPT: A Memory-Augmented Transformer Framework (Peng et al., 2024)
- IBM’s Neuro-Symbolic Integration for Trustworthy AI (2024)

7. Cross-Agent Working Memory: The Neural Bus of Collaboration

Perhaps the most innovative layer is Cross-Agent Working Memory — an active scratchpad where multiple agents synchronise task context, share variables, and resolve conflicts.

This concept aligns with the Global Workspace Theory in cognitive science (Baars et al., 2019) and shared latent space coordination models seen in OpenAI’s Swarm Framework (2024).

Such architectures allow agents to function like neurons in a collective brain — individually intelligent yet socially aware.
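A shared scratchpad with conflict resolution can be sketched with versioned writes (optimistic concurrency). This is one possible design, not the mechanism of any framework named above: `SharedWorkingMemory`, `ConflictError`, and the agent names are hypothetical.

```python
import threading


class ConflictError(Exception):
    """Raised when an agent writes against a stale version of a key."""


class SharedWorkingMemory:
    def __init__(self) -> None:
        self._lock = threading.Lock()
        # key -> (version, value, last writer)
        self._data: dict[str, tuple[int, object, str]] = {}

    def read(self, key: str) -> tuple[int, object]:
        """Return (version, value); version 0 means the key is unset."""
        with self._lock:
            version, value, _ = self._data.get(key, (0, None, ""))
            return version, value

    def write(self, agent: str, key: str, value: object, expected_version: int) -> int:
        """Write only if no other agent has updated the key since it was read."""
        with self._lock:
            current, _, writer = self._data.get(key, (0, None, ""))
            if current != expected_version:
                raise ConflictError(f"{agent} lost a race on {key!r} to {writer}")
            self._data[key] = (current + 1, value, agent)
            return current + 1


memory = SharedWorkingMemory()
version, _ = memory.read("target_quarter")           # both agents saw version 0
memory.write("planner", "target_quarter", "Q3", version)

try:
    memory.write("retriever", "target_quarter", "Q2", version)  # stale version
    conflict = False
except ConflictError:
    conflict = True
    version, value = memory.read("target_quarter")   # re-read, then reconcile
```

Rejecting stale writes forces the losing agent to re-read the shared context before acting, which keeps agents synchronised without a central arbiter for every variable.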

8. Memory as the Foundation of Trust and Accountability

In Agentic AI governance, memory is not merely a performance enabler — it is a trust mechanism. It supports:

- Traceability: reconstructing decision pathways from episodic logs.
- Accountability: aligning procedural steps to regulatory policies.
- Ethical Guardrails: embedding human-in-the-loop checkpoints via semantic validation.
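The traceability point can be made concrete with a small sketch: if every logged step records which earlier step triggered it, a decision pathway can be rebuilt by walking those links backwards. The `LogEntry` fields and the `reconstruct_path` helper are hypothetical, chosen only to illustrate the idea.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class LogEntry:
    entry_id: str
    parent_id: Optional[str]   # the step that triggered this one, if any
    agent: str
    action: str


def reconstruct_path(log: list[LogEntry], final_id: str) -> list[LogEntry]:
    """Walk parent links from a final decision back to the originating step."""
    by_id = {entry.entry_id: entry for entry in log}
    path: list[LogEntry] = []
    seen: set[str] = set()
    current: Optional[str] = final_id
    while current is not None:
        if current in seen:
            raise ValueError(f"cycle in log at {current!r}")
        seen.add(current)
        entry = by_id[current]
        path.append(entry)
        current = entry.parent_id
    return list(reversed(path))   # oldest step first


log = [
    LogEntry("e1", None, "orchestrator", "decompose request"),
    LogEntry("e2", "e1", "retriever", "fetch policy"),
    LogEntry("e3", "e1", "planner", "draft plan"),
    LogEntry("e4", "e3", "verifier", "approve plan"),
]

path = reconstruct_path(log, "e4")
for entry in path:
    print(f"{entry.entry_id}: {entry.agent} -> {entry.action}")
```

Steps that did not contribute to the final decision (here, the retriever's `e2`) are left out of the path, so the reconstruction shows the causal chain rather than the full log.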

This ties directly to the principles in:

- EU AI Act (2024): requiring explainability and data provenance.
- Singapore AI Verify Framework (IMDA, 2025): defining transparent audit trails for agentic systems.
- UK AI Safety Institute Reports (2025): emphasising “memory integrity” as a safeguard for emergent behaviour.

9. Looking Forward: From Memory Systems to Self-Reflective Agents

Next-generation Agentic AI will blend symbolic reasoning, sub-symbolic learning, and meta-cognition — enabling agents to reflect on their own memories and modify behaviours accordingly.

The future state is a self-reflective, self-correcting system that learns dynamically, maintains continuity, and embodies ethical alignment — an architecture where memory becomes consciousness in code.

10. References

- Bommasani, R. et al. (2023). Foundation Models in the Era of Memory. Stanford CRFM.
- Wu, T. et al. (2023). AutoGen: Enabling Next-Gen Multi-Agent Systems. Microsoft Research.
- Peng, Z. et al. (2024). MemGPT: Memory-Augmented Transformer Framework. Meta AI.
- Bai, Y. et al. (2023). Constitutional AI and Reflective Memory. Anthropic Research.
- DeepMind Research (2024). Adaptive Agentic Systems with Tool Learning.
- Krakauer, D. et al. (2024). Distributed Cognition in Multi-Agent Architectures. MIT CSAIL.
- PwC Research (2025). Agentic Safety: Memory, Alignment and Accountability.
- Baars, B. et al. (2019). Global Workspace Theory: A Cognitive Framework for Conscious Agents.
- European Union (2024). AI Act – Title IV: Transparency and Accountability.
- IMDA Singapore (2025). AI Verify Foundation Framework.
- UK AI Safety Institute (2025). Agentic Safety and Memory Integrity Guidelines.
