By Dr Luke Soon

1. The Agentic Shift: From Outputs to Outcomes

After years of “AI hype,” 2025 marks a turning point.

According to Google Cloud’s ROI of AI 2025 report, 88% of early adopters of Agentic AI are already seeing positive ROI across productivity, customer experience, sales, and marketing. What differentiates these organisations isn’t just the use of GenAI tools — it’s their ability to embed autonomous agents that act, decide, and coordinate under human intent and governance.

This is the transition from “assistive intelligence” to “agentic intelligence.”

Where Generative AI answers a question, Agentic AI executes a mission.

Early agentic adopters — especially in financial services — are using multi-agent systems to independently perform due diligence, execute trades, verify compliance, and manage personalised client journeys.

This marks the second wave of AI transformation: one not driven by productivity dashboards, but by autonomous execution under trust constraints.

2. The Agentic AI Universe: A System of Systems

Machine Learning: Foundational techniques for prediction, regression, and optimisation.

Deep Learning / LLMs: Neural architectures (Transformers, RNNs) and pretrained large language models enabling language understanding and multimodal reasoning.

Generative AI: Text, image, and speech synthesis; copilots; chatbots; and retrieval-augmented generation (RAG).

AI Agents: Frameworks such as LangGraph, CrewAI, and AutoGen that coordinate multiple models with memory, goal chaining, and tool calling.

Agentic AI: The frontier of self-improving, role-based, self-healing agents with embedded safety, explainability, and governance.

In financial services, this is already visible in production.

For instance:

UBS is using multi-agent risk evaluators to cross-check climate stress tests against regional regulatory thresholds.

OCBC Bank has deployed agentic workflow engines to orchestrate Know-Your-Customer (KYC) processes across internal APIs, cutting resolution time by more than 40%.

HSBC is experimenting with memory-enabled credit evaluation agents that learn from past loan decisions while maintaining audit logs for compliance review.

These examples demonstrate that the agentic frontier is not theoretical — it is operational, measurable, and ROI-positive.

3. Beyond Guardrails: The Rise of the Agentic Safety Platform

As agents gain autonomy, control must evolve.

Traditional AI governance focuses on static guardrails — model explainability, fairness, bias testing, and periodic audits. But Agentic AI operates in continuous loops of reasoning and action, requiring real-time alignment and observability.

This is where an Agentic Safety Platform (ASP) comes in.

It introduces a new infrastructure layer focused on:

Chain-of-Thought (CoT) Safety: Preventing runaway reasoning loops by monitoring deliberative steps in real time.

Memory Leak Prevention: Ensuring agents don’t accumulate or misuse long-term memory beyond policy scope.

Run-time Safety & Autonomy Limits: Embedding behavioural boundaries in agent frameworks and tool interfaces (e.g., LangGraph workflows, OpenAPI schemas).

Trust, Explainability & Alignment (Observability): Providing mission-level oversight that controls purpose, not process.
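
As an illustration of CoT safety and run-time autonomy limits, the Python sketch below supervises an agent's reasoning steps as they are emitted and halts the loop when it exceeds a step budget or keeps repeating the same step. It assumes the agent exposes its intermediate steps as plain strings; the names `ReasoningMonitor`, `RunawayReasoningError`, and `run_agent`, and the default limits, are hypothetical and not taken from any particular ASP or agent framework.

```python
from collections import Counter
from dataclasses import dataclass, field


class RunawayReasoningError(RuntimeError):
    """Raised when an agent's reasoning trace breaches a run-time limit."""


@dataclass
class ReasoningMonitor:
    """Hypothetical real-time supervisor for an agent's reasoning steps."""

    max_steps: int = 20    # illustrative step budget per task
    max_repeats: int = 2   # how often the same step may recur before halting
    trace: list[str] = field(default_factory=list)
    seen: Counter = field(default_factory=Counter)

    def observe(self, step: str) -> None:
        """Record one reasoning step and enforce the limits immediately."""
        key = " ".join(step.lower().split())  # ignore case and extra whitespace
        self.trace.append(step)
        self.seen[key] += 1
        if len(self.trace) > self.max_steps:
            raise RunawayReasoningError(
                f"step budget of {self.max_steps} exceeded")
        if self.seen[key] > self.max_repeats:
            raise RunawayReasoningError(
                f"step {step!r} repeated {self.seen[key]} times")


def run_agent(steps: list[str], monitor: ReasoningMonitor) -> list[str]:
    """Feed a simulated stream of reasoning steps through the monitor."""
    for step in steps:
        monitor.observe(step)
    return monitor.trace


if __name__ == "__main__":
    monitor = ReasoningMonitor(max_steps=5, max_repeats=1)
    try:
        run_agent(["Fetch client file", "Check income", "check  income"], monitor)
    except RunawayReasoningError as err:
        print("halted:", err)
```

Raising an exception, rather than silently truncating the trace, hands the decision about what happens next (retry, escalate, or abort) back to the orchestration layer, so a human-defined policy stays in control of the loop.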

This model shifts control from static rules to dynamic supervision — from “Did the model comply?” to “Is the agent acting within intent, safely and transparently, right now?”

In banking, this translates into:

CoT monitoring of reasoning steps in loan underwriting or credit scoring.

Run-time audit dashboards that track each agent’s decision lineage.

Memory segmentation controls to prevent cross-client data leakage.

Autonomy throttling: adjusting decision latitude based on confidence levels and materiality thresholds.
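
Autonomy throttling and decision lineage can be pictured together as a small routing policy: the agent acts alone only when its confidence is high and the decision's materiality is low; otherwise it hands off to a human, and every routing is written to an append-only log. This is a minimal sketch under stated assumptions: confidence is a score in [0, 1], materiality is measured as monetary exposure, and the thresholds, tier labels, and the names `Decision` and `route_decision` are hypothetical, not any bank's actual policy.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTONOMOUS = "act autonomously"
    HUMAN_REVIEW = "act, then flag for human review"
    HUMAN_APPROVAL = "escalate for human approval before acting"


@dataclass(frozen=True)
class Decision:
    agent_id: str
    confidence: float  # agent's confidence score, assumed to lie in [0, 1]
    exposure: float    # monetary amount at stake, used as the materiality measure


# Illustrative thresholds only; a real policy would be set by risk owners.
HIGH_CONFIDENCE = 0.90
LOW_CONFIDENCE = 0.70
MATERIALITY_LIMIT = 50_000.0


def route_decision(d: Decision, audit_log: list[dict]) -> Route:
    """Throttle an agent's decision latitude and record its lineage."""
    if not 0.0 <= d.confidence <= 1.0:
        raise ValueError("confidence must lie in [0, 1]")
    if d.exposure > MATERIALITY_LIMIT or d.confidence < LOW_CONFIDENCE:
        route = Route.HUMAN_APPROVAL
    elif d.confidence < HIGH_CONFIDENCE:
        route = Route.HUMAN_REVIEW
    else:
        route = Route.AUTONOMOUS
    # Append-only record so each agent's decision lineage can be audited later.
    audit_log.append({"agent": d.agent_id, "confidence": d.confidence,
                      "exposure": d.exposure, "route": route.name})
    return route


if __name__ == "__main__":
    log: list[dict] = []
    print(route_decision(Decision("credit-agent-1", 0.95, 12_000), log))
    print(route_decision(Decision("credit-agent-1", 0.80, 12_000), log))
    print(route_decision(Decision("credit-agent-1", 0.97, 250_000), log))
```

The three example calls route to `AUTONOMOUS`, `HUMAN_REVIEW`, and `HUMAN_APPROVAL` respectively. Because the materiality check comes first, even a highly confident agent cannot act alone on a large exposure, which reflects the idea that latitude depends on both confidence and materiality.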

4. Why Financial Services Lead the Agentic Curve

Banks operate within the tightest risk, compliance, and regulatory frameworks. Yet, paradoxically, they are also among the first to deploy safe autonomy.

According to Google’s 2025 data, 56% of financial institutions already use AI agents, with top use cases spanning:

Customer service & onboarding (57%)

Security operations & fraud detection (46%)

Product innovation & portfolio optimisation (46%)

Finance & accounting automation (51%)

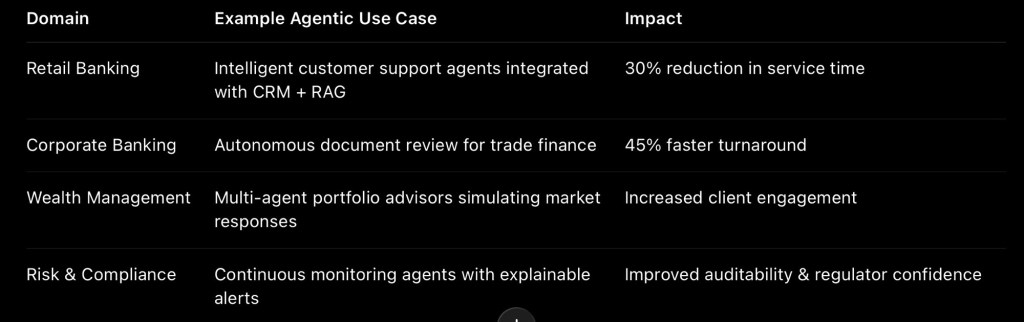

We are seeing Agentic Orchestration Loops emerge across key value chains in Asia.

These systems operate under human oversight — but not human bottlenecks.

The next competitive advantage lies in real-time, role-based, and explainable autonomy — the ability to let AI act, safely, within mission constraints.

5. The New ROI Equation: Trust × Autonomy

Google’s findings are clear — agentic maturity correlates directly with financial ROI.

Early adopters allocate more than 50% of their AI budgets to agents and report up to 10% annual revenue increases and time-to-value cycles of three to six months.

But ROI now includes a new multiplier: Trust.

I call this the Agentic Safety Multiplier:

ROI = (Productivity Gains + New Value Creation) × Trust Coefficient

Here, the Trust Coefficient reflects your system’s explainability, containment, observability, and compliance readiness.
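
The multiplier can be made concrete with a small calculation. The article does not say how the Trust Coefficient is computed, so this sketch assumes it is the unweighted mean of four scores in [0, 1], one for each factor named above; the function names, the equal weighting, and the figures in the example are hypothetical.

```python
from statistics import fmean


def trust_coefficient(explainability: float, containment: float,
                      observability: float, compliance_readiness: float) -> float:
    """Hypothetical Trust Coefficient: unweighted mean of four scores in [0, 1]."""
    scores = [explainability, containment, observability, compliance_readiness]
    if any(not 0.0 <= s <= 1.0 for s in scores):
        raise ValueError("each trust score must lie in [0, 1]")
    return fmean(scores)


def agentic_roi(productivity_gains: float, new_value_creation: float,
                trust: float) -> float:
    """ROI = (Productivity Gains + New Value Creation) x Trust Coefficient."""
    return (productivity_gains + new_value_creation) * trust


if __name__ == "__main__":
    # Two hypothetical agents with identical gross gains (in $m), different trust.
    governed = trust_coefficient(0.9, 0.9, 0.8, 1.0)    # mean = 0.9
    ungoverned = trust_coefficient(0.4, 0.3, 0.2, 0.5)  # mean = 0.35
    print(agentic_roi(3.0, 2.0, governed))
    print(agentic_roi(3.0, 2.0, ungoverned))
```

With the same 5.0 in gross value, the governed agent realises 4.5 while the ungoverned one realises about 1.75. Because the coefficient multiplies the whole sum, halving trust halves the realised return, however large the gross gains.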

An unsafe agent may deliver short-term gains, but in regulated sectors, it erodes institutional trust — and therefore long-term ROI.

6. A Future of Co-Intelligence

Agentic AI represents more than automation.

It is the beginning of co-intelligence: humans and machines sharing goals, not just tasks.

In my book Genesis: Human Experience in the Age of AI, I describe this as the merging of Human Experience (HX = CX + EX) with Artificial Agency (AA) — a synthesis of emotional intelligence and operational autonomy.

Financial institutions that harness this synergy — embedding agentic safety, ethical alignment, and human oversight — will redefine trust itself as a currency.

7. Closing Thoughts: Governing Purpose, Not Process

As Agentic AI matures, governance must shift from micromanaging every model’s action to ensuring every agent’s purpose aligns with the enterprise mission.

The future belongs to those who can govern intent, monitor reasoning, and measure trust as first-class metrics — not compliance afterthoughts.

Banks that achieve this will not only lead the next AI ROI cycle; they will set the global benchmark for responsible autonomy.

References

Google Cloud, “The ROI of AI 2025,” 2025

PwC, “Agentic Safety Platform Research,” 2025

L. Soon, “Genesis: Human Experience in the Age of AI,” 2022

MIT Sloan Management Review, “Superhuman Workforce,” 2024

Stanford HAI, “Trustworthy AI Systems,” 2024

WEF, “AI Governance for Autonomous Systems,” 2025
