Dr Luke Soon

From Generative to Agentic: The Evolution of AI Intelligence

In 2025, the conversation around AI has shifted dramatically—from generative capability to agentic orchestration. We are now entering the third epoch of AI evolution: systems that can perceive, reason, plan, and act autonomously under human-defined guardrails.

Recent research by Google Cloud (The ROI of AI 2025) shows that 88% of early adopters of Agentic AI are now seeing positive ROI, particularly across financial services, marketing, and customer experience. The rise of AI agents marks a shift from “AI as a tool” to “AI as a workforce multiplier.”

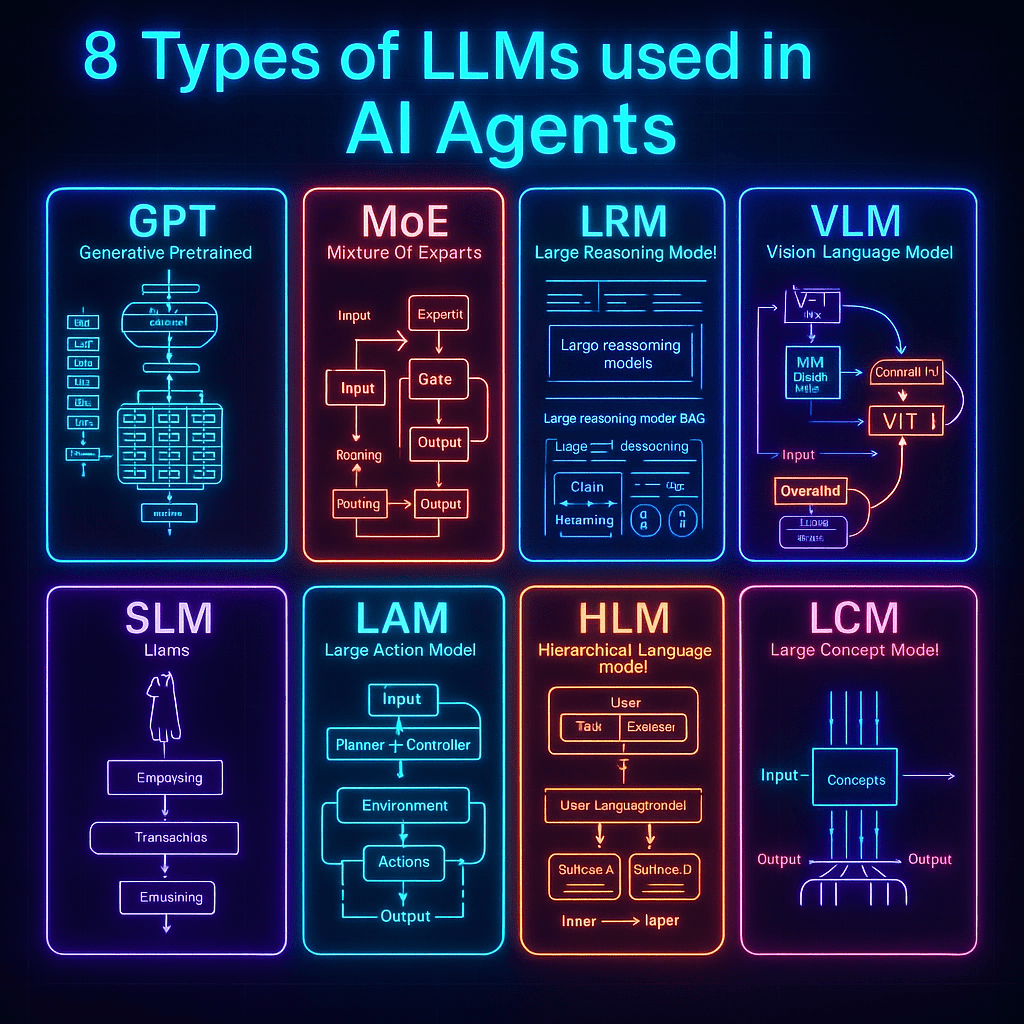

But powering this new agentic world are eight distinct types of Large Language Models (LLMs) — each specialising in unique cognitive functions that together form the architecture of autonomous systems.

1. GPT – Generative Pretrained Transformer

The GPT family remains the foundational brain for most agentic systems. These models excel at natural language understanding and generation.

In financial services, GPT-based copilots are now embedded across risk reporting, marketing compliance checks, and customer engagement workflows.

Example: J.P. Morgan’s COiN (Contract Intelligence) platform, which predates GPT-style models, parses roughly 12,000 commercial credit agreements in seconds, extracting key clauses and risk indicators; GPT-based copilots now target the same kind of document work. ROI impact: 39% of executives reported measurable productivity ROI from individual GenAI use cases.

2. MoE – Mixture of Experts

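The Mixture of Experts model introduces modularity and scalability by routing each input (in models such as Mixtral, each token) to a small subset of specialised sub-networks (“experts”), so only part of the model runs at any one time. This design underpins systems such as Gemini 1.5 Pro and Mixtral 8x7B.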

Financial example: Tier-1 insurers now use MoE architectures for multi-domain tasks — underwriting, fraud detection, and claims triage — where each expert module is fine-tuned for a domain-specific dataset. Benefit: Lower compute costs and improved specialisation without exponential model growth.
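The routing idea can be illustrated with a minimal sketch of top-k gating. Everything here is an assumption made for illustration: the gate weights, the three toy experts, and the function names are hypothetical and are not taken from Gemini, Mixtral, or any insurer's system. Production models learn the gate and apply it inside each transformer layer.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a short list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: Vector,
    gate_weights: Sequence[Vector],
    experts: Sequence[Callable[[Vector], Vector]],
    top_k: int = 2,
) -> Tuple[Vector, List[int]]:
    """Route x to the top_k experts and blend their outputs by gate weight."""
    # One gate logit per expert: dot product of that expert's gate row with x.
    logits = [sum(w * v for w, v in zip(row, x)) for row in gate_weights]
    # Keep only the top_k experts; the others are never evaluated.
    chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:top_k]
    weights = softmax([logits[i] for i in chosen])
    outputs = [experts[i](x) for i in chosen]
    blended = [
        sum(w * out[d] for w, out in zip(weights, outputs))
        for d in range(len(outputs[0]))
    ]
    return blended, chosen


# Hypothetical stand-in experts: each simply scales its input differently.
EXPERTS = [
    lambda v: [1.0 * a for a in v],  # e.g. an "underwriting" expert
    lambda v: [2.0 * a for a in v],  # e.g. a "fraud detection" expert
    lambda v: [3.0 * a for a in v],  # e.g. a "claims triage" expert
]
# Hypothetical gate: one row of weights per expert (learned in a real model).
GATE = [[2.0, 0.0], [1.0, 0.0], [-1.0, 0.0]]

output, used = moe_forward([1.0, 0.0], GATE, EXPERTS, top_k=2)
print("experts used:", used)
print("blended output:", output)
```

Only two of the three experts run for this input, which is where the compute saving comes from: capacity grows with the number of experts, while the cost per input grows only with `top_k`.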

3. LRM – Large Reasoning Model

The next frontier of intelligence lies in reasoning. LRMs integrate symbolic reasoning, retrieval-augmented generation (RAG), and chain-of-thought planning.

They represent the first generation of “thinking” models—vital for AI agents tasked with multi-step decision-making.

Financial use case: Investment banks deploy LRMs to generate explainable credit decisions and automate structured product documentation. Research reference: DeepMind’s AlphaGeometry and Anthropic’s Claude 3.5 Sonnet both demonstrate emergent reasoning capacities that mimic human deliberation.

4. VLM – Vision-Language Model

VLMs unify vision and text, allowing agents to see, read, and interpret.

In asset management and insurance, these models power document understanding agents—automatically reading KYC documents, financial statements, and scanned PDFs.

Example: HSBC’s AI agent uses multimodal reasoning to flag anomalies in trade finance documents, combining OCR, NLP, and policy reasoning layers. Frameworks: OpenAI’s GPT-4V and Qwen-VL show how multimodal grounding is becoming a baseline for financial compliance tools.

5. SLM – Small Language Model

SLMs are lightweight, private, and deployable on-premises or at the edge. They represent the “trusted micro-agents” operating within secured financial environments.

Use case: A Singaporean retail bank runs SLMs to handle low-risk, repetitive internal queries (e.g., HR, policy lookup) entirely offline, preserving data sovereignty. Trend: As data privacy remains the top concern for 37% of enterprises, SLMs offer a pragmatic alternative to cloud LLMs for regulated sectors.

6. LAM – Large Action Model

LAMs bridge the cognitive-to-actuation gap — enabling AI to not only reason but also perform actions via APIs and tools.

These models sit at the heart of agentic orchestration frameworks like AutoGPT, AgentOS, and LangGraph.

Financial use case: A trading operations agent using a LAM can retrieve data, analyse portfolio exposure, and automatically execute hedging actions — under human approval. Industry example: Bloomberg’s internal LAM prototype integrates task execution pipelines within their terminal ecosystem.
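The retrieve, analyse, and execute-under-approval flow described above can be sketched as a small tool-calling loop with a human approval gate. This is an illustrative sketch only: the tool names, the exposure figures, the limit, and the `approve` callback are all hypothetical, and the plan is hard-coded where a real LAM would generate it.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Tool:
    name: str
    run: Callable[[dict], dict]   # reads the shared context, returns new facts
    needs_approval: bool = False  # True for actions with real-world effects


def get_positions(ctx: dict) -> dict:
    # Hypothetical data retrieval; a real tool would call a positions API.
    return {"exposure": 1_200_000}


def analyse_exposure(ctx: dict) -> dict:
    # Hypothetical limit of 1,000,000; hedge whatever exceeds it.
    return {"hedge_notional": max(0, ctx["exposure"] - 1_000_000)}


def place_hedge(ctx: dict) -> dict:
    # Hypothetical execution step; no real order is sent anywhere.
    return {"order_placed": True, "order_notional": ctx["hedge_notional"]}


TOOLS: Dict[str, Tool] = {
    "get_positions": Tool("get_positions", get_positions),
    "analyse_exposure": Tool("analyse_exposure", analyse_exposure),
    "place_hedge": Tool("place_hedge", place_hedge, needs_approval=True),
}
PLAN: List[str] = ["get_positions", "analyse_exposure", "place_hedge"]


def execute_plan(
    plan: List[str],
    tools: Dict[str, Tool],
    approve: Callable[[str, dict], bool],
) -> Tuple[dict, List[str]]:
    """Run tools in order; stop at any gated tool the human declines."""
    context: dict = {}
    log: List[str] = []
    for step in plan:
        tool = tools[step]
        if tool.needs_approval and not approve(tool.name, context):
            log.append(f"{tool.name}: blocked")
            break
        context.update(tool.run(context))
        log.append(f"{tool.name}: done")
    return context, log


# A reviewer who declines: the analysis runs, but no order is placed.
ctx, log = execute_plan(PLAN, TOOLS, approve=lambda name, c: False)
print(log)
```

The design choice worth noting is that the gate sits in the executor rather than in the model: read-only tools run freely, while any tool marked `needs_approval` cannot run without an explicit human decision.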

7. HLM – Hierarchical Language Model

HLMs embody multi-layered cognition, mirroring human organisations.

They orchestrate manager and worker agents, delegating subtasks to specialised sub-agents.

Example: In anti-money-laundering (AML) investigations, an HLM supervises sub-agents: one for transaction graph analysis, one for entity risk scoring, and one for drafting regulatory reports. Research roots: The CAMEL framework and Microsoft’s AutoGen show the early emergence of hierarchical multi-agent coordination.
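The manager-and-worker pattern in the AML example can be sketched in a few lines. This is a toy illustration under stated assumptions: the three worker functions, the 10,000 threshold, and the case structure are hypothetical, and each worker is plain Python standing in for what would be a model-backed agent in frameworks such as AutoGen.

```python
from typing import Callable, Dict, List


def graph_agent(case: dict) -> dict:
    """Worker 1 (hypothetical): count distinct counterparties in the case."""
    return {"counterparties": len({t["to"] for t in case["transactions"]})}


def risk_agent(case: dict) -> dict:
    """Worker 2 (hypothetical): flag risk by total value against a made-up threshold."""
    total = sum(t["amount"] for t in case["transactions"])
    return {"risk": "high" if total > 10_000 else "low"}


def report_agent(findings: dict) -> str:
    """Worker 3 (hypothetical): draft a one-line summary from the findings."""
    return (
        f"Case {findings['case_id']}: "
        f"{findings['counterparties']} counterparties, risk {findings['risk']}."
    )


def manager(case: dict) -> str:
    """Manager: delegate analysis subtasks, merge the results, then request a report."""
    analysts: List[Callable[[dict], Dict[str, object]]] = [graph_agent, risk_agent]
    findings: dict = {"case_id": case["id"]}
    for worker in analysts:
        findings.update(worker(case))
    return report_agent(findings)


case = {
    "id": "A1",
    "transactions": [
        {"to": "X", "amount": 6_000},
        {"to": "Y", "amount": 7_000},
        {"to": "X", "amount": 500},
    ],
}
print(manager(case))  # prints "Case A1: 2 counterparties, risk high."
```

The manager never analyses transactions itself; it only decides who works on what and how results are combined, which is the property that lets each sub-agent be swapped or audited independently.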

8. LCM – Large Concept Model

LCMs represent the semantic layer of abstraction—models that learn concepts instead of just tokens.

This is critical for aligning AI reasoning with human values, ethics, and strategic foresight—the foundation of what I call Trust-Based Transformation.

In finance: LCMs could serve as governance or ethics co-pilots, translating regulatory intentions (e.g. MAS AI governance frameworks) into operational constraints for AI agents. Philosophical alignment: Fei-Fei Li’s work on human-centred AI and Geoffrey Hinton’s “concept neurons” research underpin this transition toward interpretable concept learning.

Financial Services: The Perfect Testbed for Agentic Intelligence

According to Google’s 2025 survey, 56% of financial institutions have deployed AI agents in production, from compliance automation to portfolio personalisation.

Banks such as Commerzbank and Deutsche Bank report measurable gains from AI-driven customer experience and cybersecurity automation.

Top use cases across financial services:

Customer service & experience (57%) – multimodal agents resolving client issues with empathy and precision.

Finance & accounting (51%) – autonomous reconciliation, variance analysis, and forecasting.

Security operations (46%) – AI agents detecting fraud and insider threats 24/7.

The outcome: AI agents in finance are driving a 6–10% revenue uplift and a 727% ROI over three years in some implementations.
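To put the 727% figure in perspective, here is a small worked calculation. It assumes the conventional definition of ROI as net benefit divided by cost; the cited studies may define or discount it differently, and the benefit and cost amounts below are hypothetical, chosen only to reproduce the percentage.

```python
def roi_percent(total_benefit: float, total_cost: float) -> float:
    """ROI as a percentage: net benefit relative to cost (assumed standard definition)."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) / total_cost * 100.0


# Hypothetical three-year totals in the same currency unit.
cost = 1_000_000.0
benefit = 8_270_000.0

print(f"ROI: {roi_percent(benefit, cost):.0f}%")
print(f"Benefit per unit of cost: {benefit / cost:.2f}")
```

Under this definition, a 727% ROI means total benefits of about 8.27 times the amount invested, not 7.27 times, because the original cost is subtracted before dividing.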

Building the Agentic Enterprise: Human Experience (HX) as the True ROI

As I wrote in Genesis, “technology becomes meaningful only when it enhances the human experience.”

The ROI of Agentic AI isn’t measured solely in cost savings or speed—it’s in trust, empowerment, and human amplification.

To harness these eight LLM types effectively, organisations must:

Invest in C-suite sponsorship and purpose alignment. 78% of firms with executive backing see ROI on at least one GenAI use case.

Embed HX = CX + EX frameworks, ensuring both customer and employee experiences are elevated through AI augmentation.

Adopt data governance and Responsible AI principles from the start, as emphasised in Singapore’s AI Verify and PwC’s Trustworthy AI frameworks.

Orchestrate diverse model types (GPT, LAM, LCM, etc.) to form hybrid AI agents that combine reasoning, action, and ethics.

Conclusion: The Future is Hybrid and Human-Aligned

The taxonomy of LLMs is not merely a technical catalogue — it is the neural architecture of tomorrow’s economies.

Financial services, being data-dense, risk-sensitive, and regulation-heavy, will continue to lead the experimentation frontier.

As AI agents evolve from assistants to autonomous collaborators, the organisations that thrive will be those that treat intelligence as a team sport — where humans, models, and machines co-create value in trust.

References

Google Cloud & NRG (2025). The ROI of AI 2025: How Agents Are Unlocking the Next Wave of AI-Driven Business Value.

IDC (2025). The Business Value of Google Cloud Generative AI.

Forrester (2025). Total Economic Impact™ of Google SecOps.

Stanford HAI (2024). The Rise of Hierarchical AI Systems.

DeepMind (2024). Large Reasoning Models (LRMs) and the Future of Cognitive AI.

PwC (2025). AI Jobs Barometer & Agentic Enterprise Playbook.

Fei-Fei Li (2023). The Worlds I See: Human-Centred AI.
