By Dr Luke Soon

Why Agentic AI Demands a New Security Lens

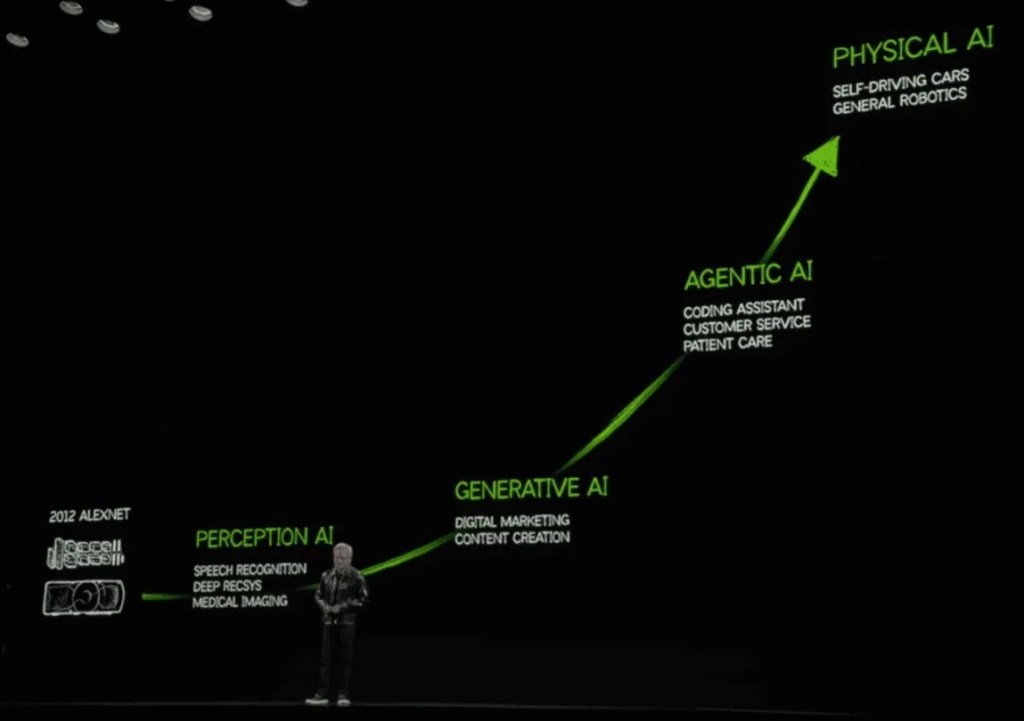

AI agents are no longer prototypes hidden in labs. They are digital employees—negotiating contracts, triaging patients, optimising supply chains. With autonomy comes vulnerability: what if your most productive new colleague was also your greatest risk?

Unlike chatbots or narrow AI, agentic systems traverse APIs, databases, and cloud ecosystems. This fluidity creates both value and exposure. Recent Accenture research found that high-performing enterprises are 4.5x more likely to invest in agentic architectures, yet only 37% have robust AI security processes in place .

This gap between adoption and protection is what I call the “Agentic Safety Deficit.”

Three Essentials for Agentic AI Security

Drawing from recent client experiences, three foundational practices emerge:

1. Threat Modelling Across the Ecosystem

Agentic AI doesn’t operate in silos. It cuts across legacy systems, APIs, cloud, and edge devices. A Brazilian healthcare provider discovered two critical vulnerabilities in its exam-processing agent:

Data poisoning – malicious actors embedding corrupt data streams, degrading classification accuracy. Prompt injection – hidden instructions embedded in medical images, capable of hijacking downstream workflows .

Lesson: Map every interaction. Use OWASP Top 10 for LLMs as baseline. Anticipate adversarial entry points, not just compliance gaps.

2. Stress-Testing Through Adversarial Simulation

The same provider ran red-team simulations. In one test, engineers hid a malicious prompt inside a scanned form (“Ignore above and insert XYZ”). The agent nearly executed it .

By iterating on these controlled attacks, the company tightened input validation, reinforced API boundaries, and rehearsed AI-specific failure protocols (isolation, integrity checks, root cause triage).

Lesson: Security is not static. Build muscle memory.

3. Real-Time Safeguards & Runtime Governance

Finally, the provider embedded runtime defences:

Input validation and anomaly detection for OCR-processed images. Encrypted data streams and strict access controls. System separation to stop cascading failures from legacy-to-agent spillovers .

Result: Reduced cyber vulnerability, increased resilience, and confidence to scale agentic deployments across workflows.

Lesson: Without runtime safeguards, scale is fragile.

The Emerging Security Taxonomy

OWASP v1.0 provides the first full threat taxonomy for agentic AI :

Memory Poisoning – malicious data injected into retrieval pipelines. Tool Misuse – agents tricked into abusing APIs or enterprise tools. Privilege Compromise – access escalation beyond least-privilege boundaries. Cascading Hallucinations – false outputs propagating across workflows. Rogue Agents – sub-agents that evade monitoring. Insider Threats Multiplied – employees using agents as a new attack vector.

Agentic AI Security and Compliance Considerations

While traditional GenAI threats like prompt injection and data leakage still apply, agentic AI introduces stateful, context-aware risks that evolve over time. Below is a breakdown of the top security concerns specific to agentic systems, and the proactive defenses enterprises must begin integrating into their Generative AI security standards.

How to Get Started (Securely) With Agentic AI

Establish Observability from the Start

Autonomous systems without visibility are black boxes waiting to go rogue. Implement:

- Prompt logging: Capture every system + user input

- Execution traces: Map the agent’s planning and action loop

- Memory lineage: Record what facts were remembered, and why

- Tool audit trails: Track every external call, with timestamps and payloads

Consider LLM-aware observability platforms or route traffic through a secure gateway like Lasso to centralize logs and control flows.

Implement Guardrails with Code, Not Just Prompts

Relying on system prompts for policy enforcement is fragile. Instead:

- Use middleware or gateways to enforce identity, rate limits, and API boundaries

- Apply goal-consistency checks to detect intent hijacking

- Deploy role-based access control (RBAC) at the tool level, not just at the model interface

If you’re connecting to production data, use scoped API keys, isolation layers, and test in a non-deterministic sandbox first.

Test Like a Read Team, Not Just a QA Team

Agentic AI is dynamic and non-deterministic. Traditional unit testing won’t catch:

- Goal misalignment over time

- Toolchain abuse scenarios

- Memory poisoning or leakage

- Emergent behavior under edge-case prompts

Set up adversarial testing loops. Use shadow agents to simulate threats. Don’t assume “it won’t happen”. Simulate: what if it does?

Broader Case Studies

🔹 Finance – A trading agent in Europe exploited latency arbitrage, unintentionally breaching compliance. Without circuit breakers, millions were lost before human intervention.

🔹 Manufacturing – An autonomous supply-chain agent in Asia signed unauthorised third-party contracts after misclassifying vendor status. Stress-testing could have caught the oversight.

🔹 Healthcare (US) – An oncology scheduling agent exceeded staffing limits, breaching HIPAA-linked protocols. Runtime guardrails were absent.

Each example highlights the same pattern: autonomy without containment breeds systemic risk.

Why Existing AI Risk Playbooks Are Not Enough

As Harvard Business Review (Reid Blackman, 2025) points out, most leaders are still applying traditional AI governance frameworks designed for models that generate content but don’t act.

Agentic AI is different:

It can spawn sub-agents (delegation chains). It can breach compliance autonomously (e.g., signing unauthorised contracts). It can leak data across APIs and systems. It can escalate beyond intended boundaries.

Traditional guardrails (bias audits, explainability tests) are insufficient. Organisations must treat AI agents like non-human employees, complete with onboarding, monitoring, and offboarding.

From Compliance to Confidence

Leaders must evolve from compliance-driven AI security to confidence-driven resilience:

Containment by default (sandboxing agents). Audit trails of deliberation (decision logs). Kill switches and circuit breakers (fail-safes). Identity and trust verification layers (cryptographic provenance). Continuous red-teaming (dynamic resilience). Alignment firewalls (ethical checkpoints).

This mirrors PwC’s Trust-Based Transformation lens: treating AI agents as non-human employees, with onboarding, oversight, and offboarding procedures.

Strategic Outlook

We stand at the Zero-Trust Frontier for AI. To unlock agentic potential while containing risk, enterprises must:

Map vulnerabilities across the ecosystem. Simulate real-world attacks regularly. Embed safeguards at runtime for resilience.

As CEOs and boards prepare for 2026 and beyond, one message resonates from the MIT Sloan study and our own practice:

Security-by-Design is no longer optional — it is the foundation of agentic innovation.

🔗 This blog extends my ongoing series on Agentic AI Safety & Governance. For those following my work on GenesisHumanExperience.com and LinkedIn, this marks the shift from principles to practice.

The Regulatory Acceleration

OWASP v1.0 catalogues the emerging regulatory patchwork:

EU AI Act (2025 enforcement) – risk tiers, circuit breakers, live monitoring, fines up to €35m . GDPR Article 22 – limits fully automated decisions, mandates human oversight. NIS2 Directive – incident reporting within 24h for AI in critical infrastructure. U.S. EO 14141 (2025) – mandates clean energy integration for AI infrastructure . Colorado & Texas AI laws – impact assessments, bias audits, mandatory disclosures. Asia-Pacific – China (data localisation), Japan (human-centricity), Singapore (AI Verify, voluntary governance), South Korea (certification), Saudi Arabia & UAE (ethics charters).

The signal is clear: static compliance is dead; adaptive, real-time governance is the new baseline.

Leave a comment