As I have argued repeatedly in recent posts on GenesisHumanExperience.com and on LinkedIn, the age of Agentic AI is not a continuation of the Generative AI hype cycle. It is the decisive shift from passive prediction machines to autonomous, interoperable systems capable of reasoning, acting, and evolving in dynamic ecosystems.

If Generative AI was the dawn, Agentic AI is the sunrise.

In this piece, I want to unpack the technical anatomy of Agentic AI using three complementary perspectives:

The AI Agent Stack (2025), The AI Agent System Blueprint, and The Foundations of the Agentic AI Tech Stack.

These are more than a taxonomy of tools; they reflect the multi-layered engineering challenge of building safe, performant, and scalable agent ecosystems.

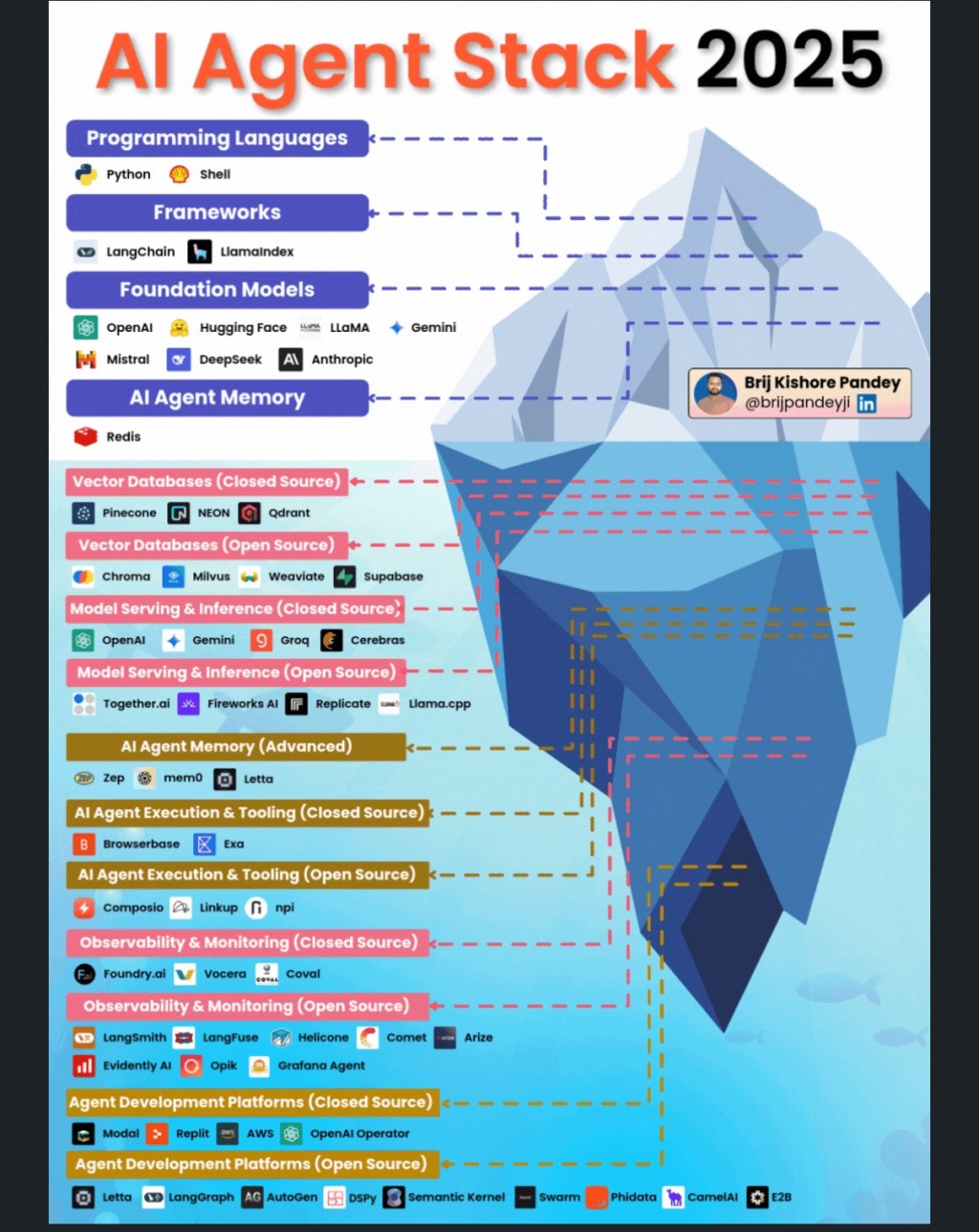

1. The AI Agent Stack (2025): From Iceberg to Infrastructure

Imagine the Agent Stack as an iceberg. What is visible above the water—programming languages (Python, Shell), frameworks (LangChain, LlamaIndex), and foundation models (OpenAI, Hugging Face, LLaMA, Gemini, Mistral, DeepSeek, Anthropic)—is just the tip.

Beneath the surface lies the critical machinery:

Memory (Redis, Zep, mem0, Letta): enabling persistence beyond single prompts, crucial for longitudinal tasks such as compliance monitoring or customer journey orchestration.

Vector Databases: both closed (Pinecone) and open (Qdrant, Chroma, Weaviate, Milvus, Supabase), powering high-dimensional semantic recall.

Model Serving & Inference: bridging proprietary platforms (OpenAI, Gemini, Groq, Cerebras) with open-model alternatives (Together.ai, Replicate, Llama.cpp).

Execution & Tooling: including open-source tooling (Composio, Linkup, npm) and closed-source SaaS (Browserbase, Exa).

Observability & Monitoring: ranging from closed compliance dashboards to hosted and open-source LLMOps tools (LangSmith, Langfuse, Helicone, Comet, Arize, Evidently, Grafana).

Agent Development Platforms: closed (AWS, Modal, Replit, OpenAI Operator) and open (LangGraph, AutoGen, Semantic Kernel, Swarm, Phidata).
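To make "semantic recall" concrete, here is a minimal sketch of what a vector store does at its core: rank stored items by cosine similarity to a query vector. The three-dimensional "embeddings" and the `MEMORY_STORE` contents are made-up values for illustration; a real system would use a trained embedding model and one of the databases listed above, whose APIs are not shown here.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def recall(query_vec, store, top_k=2):
    """Return the top_k stored texts most similar to query_vec."""
    scored = [(cosine_similarity(query_vec, vec), text) for text, vec in store]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:top_k]]


# Hypothetical hand-made embeddings; real embeddings have hundreds of dimensions.
MEMORY_STORE = [
    ("Customer asked about refund policy", [0.9, 0.1, 0.0]),
    ("Quarterly compliance audit completed", [0.0, 0.2, 0.9]),
    ("Customer requested a refund for order 17", [0.8, 0.3, 0.1]),
]

# A query vector that points in the "refund" direction of this toy space.
results = recall([1.0, 0.2, 0.0], MEMORY_STORE, top_k=2)
print(results)
```

Both refund-related memories rank above the audit entry because their vectors point in nearly the same direction as the query, which is the property production vector databases exploit at much larger scale.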

Observation: This is where Agentic AI diverges from traditional software stacks: agents are not just deployed; they must be observed, scaffolded, and constantly aligned. As I’ve noted in my post “Genesis at the Fork – 2025→2050”, observability in Agentic AI will likely become a compliance category as significant as cybersecurity【WEF 2025; PwC AI Safety Index】.
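What "observing" an agent might look like in practice can be sketched with a simple tracing decorator. This is an assumption-laden illustration, not how any of the tools named above work internally: the `traced` decorator, the in-memory `TRACE` list, and the `plan` and `act` steps are all hypothetical names.

```python
import functools
import time

TRACE = []  # in-memory trace log; a real system would export to a monitoring backend


def traced(step_name):
    """Decorator that records inputs, outcome, and duration of an agent step."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            record = {"step": step_name, "args": args, "kwargs": kwargs}
            try:
                result = func(*args, **kwargs)
                record["status"] = "ok"
                record["output"] = result
                return result
            except Exception as exc:
                record["status"] = "error"
                record["error"] = repr(exc)
                raise
            finally:
                # Runs on success and on failure, so every step is logged.
                record["duration_s"] = time.perf_counter() - start
                TRACE.append(record)
        return wrapper
    return decorator


@traced("plan")
def plan(goal):
    """Hypothetical planner: turns a goal into two tasks."""
    return [f"look up {goal}", f"summarise {goal}"]


@traced("act")
def act(task):
    """Hypothetical executor: fails on summarise tasks to show error capture."""
    if "summarise" in task:
        raise ValueError("no documents retrieved")
    return f"done: {task}"


for task in plan("refund policy"):
    try:
        act(task)
    except ValueError:
        pass  # the failure is still captured in TRACE

for record in TRACE:
    print(record["step"], record["status"])
```

The point of the design is that failures are recorded alongside successes, so an auditor can reconstruct what the agent attempted and not only what it completed.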

2. The AI Agent System Blueprint: Controller Agents and Interoperability

The second perspective is the systemic orchestration of agents. This blueprint aligns with my “HX = CX + EX” principle (Human Experience = Customer Experience + Employee Experience), where agents serve as connective tissue between inputs, reasoning, and outputs.

Input/Output: Multi-modal ingestion (text, video, audio, images) is not trivial: each mode requires its own embedding pipeline and retrieval strategy.

Orchestration: Frameworks like LangGraph and Guardrails are not “add-ons”; they are safety scaffolds that reduce hallucinations, enforce context limits, and embed compliance rules.

MCP Server Layer: This is critical. Think of it as the operating system kernel for agents, mediating between semantic/vector DBs, APIs (Stripe, Slack), and models.

Data & Tools: Enterprise systems (ERP, CRM, HRIS) will converge with agent APIs, giving rise to context-aware execution.

Reasoning: Where LLMs were “completion engines,” agents here are decision engines, chaining together reasoning paths (CoT, ToT, reflection).

Interoperability: The future will not be single-vendor. Multi-agent protocols will be as important as TCP/IP was for the internet.
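The "kernel" analogy for the mediation layer can be illustrated with a small sketch in which agents never call tools directly; every call passes through a registry that checks permissions first. This is not an implementation of the MCP specification. `ToolRegistry`, the agent id, and both stand-in tools are hypothetical names chosen for illustration.

```python
class ToolRegistry:
    """Hypothetical mediation layer: agents call tools by name, never directly."""

    def __init__(self):
        self._tools = {}   # tool name -> callable
        self._grants = {}  # agent id -> set of tool names it may call

    def register(self, name, func):
        self._tools[name] = func

    def grant(self, agent_id, tool_name):
        self._grants.setdefault(agent_id, set()).add(tool_name)

    def call(self, agent_id, tool_name, **params):
        if tool_name not in self._tools:
            raise KeyError(f"unknown tool: {tool_name}")
        if tool_name not in self._grants.get(agent_id, set()):
            raise PermissionError(f"{agent_id} may not call {tool_name}")
        return self._tools[tool_name](**params)


# Stand-in tools; real ones would wrap a vector DB query or a messaging API.
def search_docs(query):
    return [f"doc about {query}"]


def send_message(channel, text):
    return {"channel": channel, "text": text, "sent": True}


registry = ToolRegistry()
registry.register("search_docs", search_docs)
registry.register("send_message", send_message)
registry.grant("support-agent", "search_docs")  # read access only

hits = registry.call("support-agent", "search_docs", query="refunds")

try:
    registry.call("support-agent", "send_message", channel="#ops", text="hi")
    blocked = False
except PermissionError:
    blocked = True

print(hits, blocked)
```

Routing every call through one choke point is what makes the layer kernel-like: permissions, logging, and rate limits can be enforced in one place without trusting each agent to behave.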

I argue that interoperability standards for agents will bifurcate into “Commonwealth Protocols” (open, federated, interoperable) and “Fortress Protocols” (closed, proprietary, siloed), with profound geopolitical and economic consequences【Stanford HAI 2025; OECD AI Policy Observatory】.

3. Foundations of Agentic AI Tech Stack: Layered Architectures

The third perspective is a layered architecture resembling the OSI model of the internet era.

Input Layer: Multi-source ingestion (Slack bots, Zapier, APIs, RSS). This layer defines how “aware” an agent can become.

Foundation Models Layer: The split between user-query models (GPT-4o, Claude 3, Gemini 1.5) and multimodal models (Vision, Pro Vision, Whisper) reflects the emerging division of cognitive labour.

Agents Framework Layer: Planning (CrewAI, AutoGen), memory (Pinecone, Weaviate, Sheets), reflection (self-critique prompts, AutoGen Review), and tool use (LangChain Tools, APIs). This is where agents learn to reason about themselves.

Tools Integration Layer: APIs, code interpreters, error handling, connecting agents to enterprise software. This enables closed-loop execution: agents that act, fail, and self-repair.

Execution Environment: Sandboxes, permissions, error handlers. This will become the equivalent of containerisation (Docker/Kubernetes) for Agentic AI.

Orchestration Layer: Workflow management (LangGraph, n8n), task routing, and resource allocation. This is where autonomy meets governance.

Safety Guardrails: Guardrails AI, validators, self-critique. Without these, we risk sliding into “Fortress AI,” where agents optimise for goals misaligned with human intent【Anthropic 2025; EU AI Act 2025】.
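The "act, fail, and self-repair" loop from the Tools Integration Layer can be sketched as a bounded retry in which a repair step rewrites the arguments after each failure. Everything here is a hypothetical stand-in: in a real agent the repair step would likely be a model call that reads the error message, and the conversion rates are made up.

```python
def run_with_repair(action, repair, args, max_attempts=3):
    """Try action(**args); on failure, let repair(args, error) propose new args."""
    history = []
    for attempt in range(1, max_attempts + 1):
        try:
            result = action(**args)
            history.append((attempt, "ok", dict(args)))
            return result, history
        except Exception as exc:
            history.append((attempt, f"error: {exc}", dict(args)))
            if attempt < max_attempts:
                args = repair(args, exc)
    raise RuntimeError(f"gave up after {max_attempts} attempts")


def convert_currency(amount, currency):
    """Stand-in tool that rejects currency codes it does not recognise."""
    rates = {"USD": 1.0, "EUR": 0.5}  # made-up rates for illustration
    if currency not in rates:
        raise ValueError(f"unsupported currency {currency!r}")
    return amount * rates[currency]


def normalise_currency(args, error):
    """Stand-in repair step: clean up the currency code and try again."""
    fixed = dict(args)
    fixed["currency"] = fixed["currency"].strip().upper()
    return fixed


result, history = run_with_repair(
    convert_currency,
    normalise_currency,
    {"amount": 10, "currency": " eur "},
)
print(result)
for entry in history:
    print(entry)
```

The `max_attempts` bound matters as much as the repair itself: without it, a self-repairing agent can loop indefinitely, which is one reason the execution environment and orchestration layers sit around this one.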

Convergence and Implications

What these stacks reveal is that Agentic AI is not a single model, nor a single framework—it is a system of systems. To build responsibly, enterprises and policymakers must address:

Observability-as-a-Service: Mandatory monitoring of reasoning traces and decision trees.

Interoperability Standards: Open protocols preventing agent lock-in.

Sandboxing & Permissions: Clear boundaries for execution, akin to role-based access in cybersecurity.

Memory & Forgetting: Ethical frameworks around what agents remember and what they must forget.

HX Alignment: Ensuring agents amplify human purpose, not replace it.
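The "Memory & Forgetting" requirement can be made tangible with a small sketch: a memory store in which every item expires after a retention period and can also be erased on request. `ForgettingMemory` and its methods are hypothetical names, and the 60-second retention period is an arbitrary value for illustration; real retention rules would come from policy, not from code defaults.

```python
import time


class ForgettingMemory:
    """Hypothetical agent memory with a retention period and explicit erasure."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so the example can simulate time
        self._items = {}     # key -> (value, stored_at)

    def remember(self, key, value):
        self._items[key] = (value, self._clock())

    def recall(self, key):
        entry = self._items.get(key)
        if entry is None:
            return None
        value, stored_at = entry
        if self._clock() - stored_at >= self._ttl:
            del self._items[key]  # expired: forget on access
            return None
        return value

    def forget(self, key):
        """Erase on request; returns True if something was removed."""
        return self._items.pop(key, None) is not None


# Simulated clock so the example runs instantly.
now = [0.0]
memory = ForgettingMemory(ttl_seconds=60, clock=lambda: now[0])

memory.remember("email", "a@example.com")
memory.remember("preference", "dark mode")

fresh = memory.recall("email")            # still within the retention period
erased = memory.forget("email")           # explicit erasure request
now[0] = 61.0                             # advance past the retention period
expired = memory.recall("preference")     # expired, so nothing is returned

print(fresh, erased, expired)
```

This sketch only forgets lazily, when an expired item is next accessed. A system with strict deletion obligations would also need a periodic sweep and would have to cover copies held in logs, caches, and vector indexes.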

As I argued in “AI Agents vs Agentic AI: Cutting Through the Hype”, this is where we must distinguish autonomous competence from embodied intelligence. We are not building tools—we are building digital counterparts that will increasingly shape our economies, societies, and intimate experiences.

References

PwC. AI Jobs Barometer 2025.

Stanford HAI. AI Index Report 2025.

OECD. AI Policy Observatory.

World Economic Forum. AI Safety and Governance Frameworks 2025.

Anthropic. Constitutional AI and Guardrails Research.

EU. Artificial Intelligence Act 2025.

DeepMind & OpenAI Technical Papers on Multi-Agent Orchestration.

Closing Thoughts

The Agentic AI stack is our generation’s TCP/IP moment. The question is not whether it will scale—it will. The real question is how it will be governed.

Will we architect a Commonwealth of Agents, interoperable and human-centred? Or a Fortress of Agents, siloed, extractive, and misaligned?
