By Dr Luke Soon – Computer Scientist, AI Ethicist

The Stakes: From Routine Turbulence to Irreversible Transition

In the coming decade, work will not simply change; it will be rewired at the atomic level. Every occupation, from clerical administration to law and tax, will be decomposed into sub-tasks that AI can automate, reassemble, and redistribute.

This is not a distant forecast; it is already under way. The World Economic Forum's Future of Jobs Report (2023) projected that by 2027, 83 million jobs would be displaced while 69 million new ones would be created, a net loss of about 14 million. The Stanford HAI AI Index (2025) confirms that AI adoption has already surpassed 55% of enterprises globally, with productivity gains of 30–60% in fields like coding, customer support, and finance.

The challenge is not whether AI will disrupt jobs. The challenge is whether societies will build the trust, resilience, and equity mechanisms to transform turbulence into abundance.

The Luke–Ryuka Extended HX Framework: The Most Robust Compass Yet

Our research has culminated in the most comprehensive framework ever designed to understand and govern the future of work under AI.

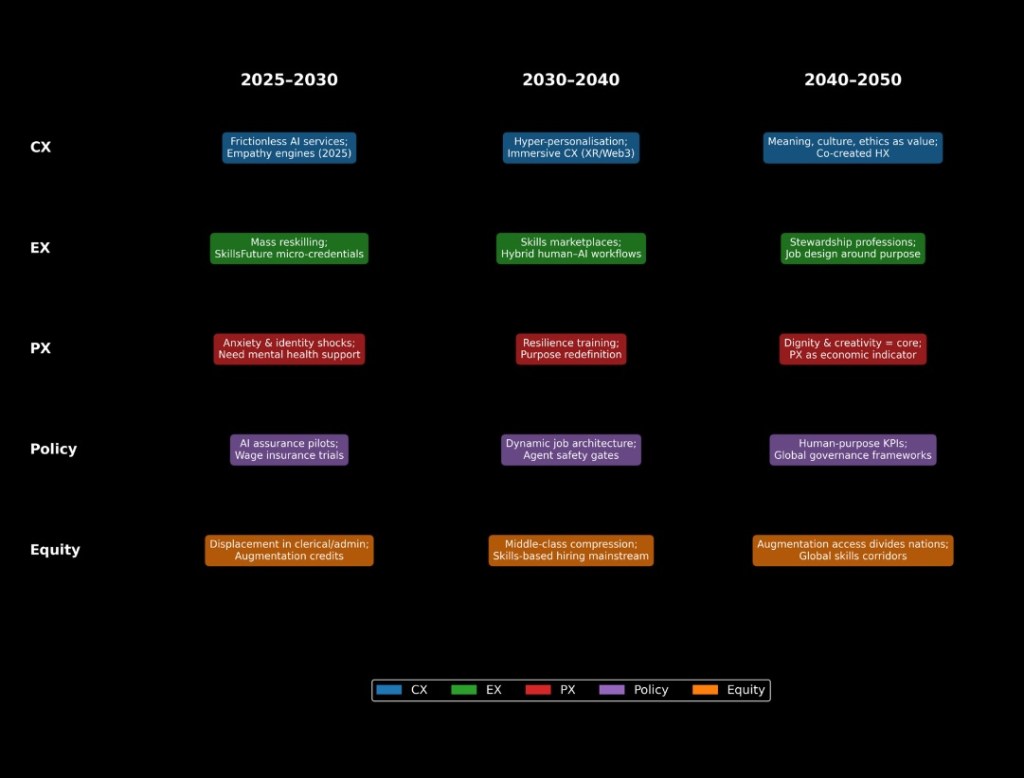

At its core is HX = CX + EX + PX:

CX (Customer Experience): Trust and empathy as differentiators in an economy where AI removes friction.

EX (Employee Experience): Skills portfolios, adaptive learning ecosystems, and inclusion mechanisms.

PX (Psychological Experience): Anxiety, identity, dignity, and human resilience as first-class variables.
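One way to make the formula operational is to treat each layer as a measured score. The sketch below is a hypothetical illustration, not part of the published framework: it assumes each of CX, EX, and PX has already been normalised to the range 0 to 1 (the `HXScore` class and its fields are invented names), and it reads HX = CX + EX + PX literally as an unweighted sum.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HXScore:
    """Hypothetical layer scores for one sector, each normalised to [0, 1]."""

    cx: float  # Customer Experience, e.g. a trust and empathy index (assumed)
    ex: float  # Employee Experience, e.g. reskilling and inclusion coverage (assumed)
    px: float  # Psychological Experience, e.g. 1 minus a normalised anxiety index (assumed)

    def __post_init__(self) -> None:
        # Reject scores outside the assumed normalised range.
        for name in ("cx", "ex", "px"):
            value = getattr(self, name)
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{name} must be in [0, 1], got {value}")

    @property
    def hx(self) -> float:
        """HX = CX + EX + PX as an unweighted sum, so it ranges over [0, 3]."""
        return self.cx + self.ex + self.px

    def weakest_layer(self) -> str:
        """Name of the lowest-scoring layer, a candidate focus for intervention."""
        layers = {"CX": self.cx, "EX": self.ex, "PX": self.px}
        return min(layers, key=lambda layer: layers[layer])


if __name__ == "__main__":
    # Illustrative numbers only, not measured data.
    legal = HXScore(cx=0.7, ex=0.5, px=0.3)
    print(round(legal.hx, 2), legal.weakest_layer())  # 1.5 PX
```

Because an unweighted sum lets a strong CX score mask a weak PX score, the sketch also reports the weakest layer; a real index might instead weight the layers or set a floor for PX, a design choice this sketch does not settle.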

Around this three-layer core, we’ve built rails of distributional equity, governance, compute economics, green AI, and labour-market policy, informed by PwC’s Leader’s Guide to Value in Motion, WEF’s Future of Jobs Report (2025), IEDC’s Labour Market Review, and HAI’s AI Index (2025).

This framework maps short-term (2025–2030), mid-term (2030–2040), and long-term (2040–2050) horizons across jobs & skills, distributional impacts, macro outcomes, and HX layers. It is evidence-tethered, policy-relevant, and human-centred.

Case in Point: Singapore’s Law and Tax Professions

Let us test the framework against Singapore’s law and tax sectors, two domains where trust, compliance, and intellectual rigour have historically insulated jobs.

Short Term (2025–2030):

Jobs & Skills: AI copilots now draft contracts, conduct discovery, and automate compliance reports. In tax, generative AI models prepare filings, detect anomalies, and pre-empt audits.

Distributional Impact: Junior associates, paralegals, and clerical tax staff face rapid task erosion. Women, minorities, and younger professionals, who are disproportionately represented in junior roles, are the most exposed.

EX/PX: Anxiety is rising among law graduates (“Will my degree still matter?”). There is an urgent need for reskilling into AI auditability, ethical reasoning, and client relationship management.

Mid Term (2030–2040):

Jobs & Skills: Law firms pivot from case preparation to AI oversight and legal strategy design. Tax professionals evolve into policy interpreters and cross-border compliance stewards.

Distributional Impact: The middle layer of practice shrinks; only top strategists and AI stewards thrive. Without intervention, the middle class of these professions could be hollowed out.

HX: To prevent disillusionment, governments must embed skills adjacencies (law → mediation, tax → ESG reporting) and support workers through identity shifts.

Long Term (2040–2050):

Jobs & Skills: Law and tax become hybrid human–AI stewardship professions. Humans govern AI reasoning, ethical interpretation, and international arbitration.

Macro Outcome: Nations that invest early in PX safeguards, trust systems, and augmentation access will dominate global legal and tax arbitration markets.

Singapore, with its SkillsFuture, Workforce Singapore, and AI Verify initiatives, is already positioned to lead, but only if it makes psychological resilience and adjacency-based reskilling as central as digital skills.

Irrefutable Imperatives for Humanity

Anchor Policy in HX (CX + EX + PX): Human dignity, resilience, and inclusion must be policy design variables, not afterthoughts.

Design Adjacency Pathways: Every job at risk must have two clear “adjacent” skill pathways into growth roles, backed by micro-credentialing and portable benefits.

Fund Augmentation Equity: Ensure equal access to AI tools, not just training. Without access, the augmentation gap becomes the new inequality.

Operationalise Trust: Adopt AI assurance regimes (safety testing, disclosure, audit) as non-negotiables. Singapore’s AI Verify is a model to export.

Monitor PX Indicators: Track anxiety, purpose, and resilience alongside unemployment. A nation’s psychological health is now an economic variable.

Green the Compute: Tie AI adoption to energy efficiency and emissions intensity. Compute decarbonisation is workforce policy.
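The adjacency-pathway imperative is concrete enough to audit. A minimal sketch, assuming a hypothetical registry that lists, for each at-risk role, its candidate pathways and whether each is backed by a micro-credential and by portable benefits (the `Pathway` class and `pathway_gaps` function are illustrative names, not an existing tool). It counts only fully backed pathways and reports how many each role is short of the two required.

```python
from dataclasses import dataclass
from typing import Dict, List

REQUIRED_PATHWAYS = 2  # "two clear adjacent skill pathways" per at-risk job


@dataclass(frozen=True)
class Pathway:
    """One adjacent skill pathway from an at-risk role into a growth role."""

    target_role: str
    micro_credential: bool  # is a recognised micro-credential attached?
    portable_benefits: bool  # do benefits follow the worker across employers?

    def is_backed(self) -> bool:
        # Count a pathway only if it has both forms of backing.
        return self.micro_credential and self.portable_benefits


def pathway_gaps(
    registry: Dict[str, List[Pathway]], required: int = REQUIRED_PATHWAYS
) -> Dict[str, int]:
    """Map each role with too few backed pathways to its shortfall."""
    gaps: Dict[str, int] = {}
    for role, pathways in registry.items():
        # Deduplicate by target role so the same pathway is not counted twice.
        backed = {p.target_role for p in pathways if p.is_backed()}
        if len(backed) < required:
            gaps[role] = required - len(backed)
    return gaps


if __name__ == "__main__":
    # Hypothetical registry; the backing flags are invented for illustration.
    registry = {
        "Junior legal associate": [Pathway("Mediation", True, True)],
        "Clerical tax staff": [Pathway("ESG reporting", True, False)],
    }
    print(pathway_gaps(registry))  # {'Junior legal associate': 1, 'Clerical tax staff': 2}
```

Requiring both forms of backing is a deliberately strict reading of the imperative; a looser audit could count credentialed pathways separately from those with portable benefits.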

The Time Is Nigh, the Time Is Now: Why This Matters

The next five years (2025–2030) are the breaking point. WEF, HAI, PwC, and IEDC all converge: disruption is unavoidable, but trajectories diverge. If we design for trust, equity, and HX, we create abundance. If not, we accelerate fragmentation.

This is not just about jobs. It is about whether nations can preserve meaning, purpose, and human dignity in an age where AI executes the “what” of work. Governments must decide the “why”.

A Call to Policymakers – and Human Institutions of the World!

The evidence is irrefutable. The Luke–Ryuka HX Framework is not a thought experiment; it is a path forward, the narrow path to survival and renewal.

Singapore has already shown that foresight, skills, and trust can be fused into policy. The world must now scale this thinking, or risk sleepwalking into a future where jobs vanish, trust collapses, and meaning erodes.

The choice is stark: abundance with dignity, or turbulence without end.

💡 If you are a policymaker reading this, the question is not whether you should act, but how fast you can move to embed HX into your national strategy.

Delta Insights (2025–2050 Master Trajectories)

Since publishing the original piece, we have translated the urgency into granular trajectories for six professions (Legal, Tax, Nursing, Manufacturing, Customer Support, and Software/Data).

Each role now has a year-by-year skill progression, marked with Transition Pathways:

Augmentation (2025–2030) → AI copilots and task automation.

Hybrid Mastery (2031–2040) → shared cognition with agentic AI.

Machine Mastery (2041–2050) → embodied and autonomous AI systems dominate tasks; humans anchor purpose, ethics, and creativity.

Skills are tagged as Emerging or Critical by Year to enable MOM/PSD/SSG to phase funding and interventions. The heatmaps make disruption visible: a Legal Associate’s proficiency in AI Application climbs from Level 2 in 2025 to Level 5 by 2038, well before Machine Mastery.

Heatmap to embed: Legal Associate – Skill Trajectory (2025–2050, Trust-Based Scenario). Why this one? It is intuitive for policymakers: it shows a profession already being reshaped by AI drafting tools, then rising sharply into Hybrid and Machine Mastery. The dashed lines at 2030 and 2040 clearly mark the transition points (Augmentation → Hybrid → Mastery).
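The phase boundaries and the one trajectory quoted above can be checked mechanically. A minimal sketch, assuming the non-overlapping Transition Pathway boundaries (2025–2030, 2031–2040, 2041–2050); the function names are hypothetical, and the trajectory holds only the two data points stated in the text (Level 2 in 2025, Level 5 in 2038), not the full heatmap.

```python
from typing import Dict, Optional

# Non-overlapping phase boundaries taken from the Transition Pathways.
PHASES = (
    (2025, 2030, "Augmentation"),
    (2031, 2040, "Hybrid Mastery"),
    (2041, 2050, "Machine Mastery"),
)

# Only the two data points quoted in the text; intermediate years are not filled in.
LEGAL_ASSOCIATE_AI_APPLICATION: Dict[int, int] = {2025: 2, 2038: 5}


def transition_phase(year: int) -> str:
    """Return the transition phase that contains `year`."""
    for start, end, name in PHASES:
        if start <= year <= end:
            return name
    raise ValueError(f"{year} is outside the 2025-2050 horizon")


def first_year_at_level(trajectory: Dict[int, int], level: int) -> Optional[int]:
    """Earliest recorded year whose proficiency is at or above `level`, or None."""
    for year in sorted(trajectory):
        if trajectory[year] >= level:
            return year
    return None


if __name__ == "__main__":
    year = first_year_at_level(LEGAL_ASSOCIATE_AI_APPLICATION, 5)
    print(year, transition_phase(year))  # 2038 Hybrid Mastery
```

Run against those two points, the sketch places 2038 in Hybrid Mastery, consistent with the claim that the Level 5 milestone arrives well before Machine Mastery.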
