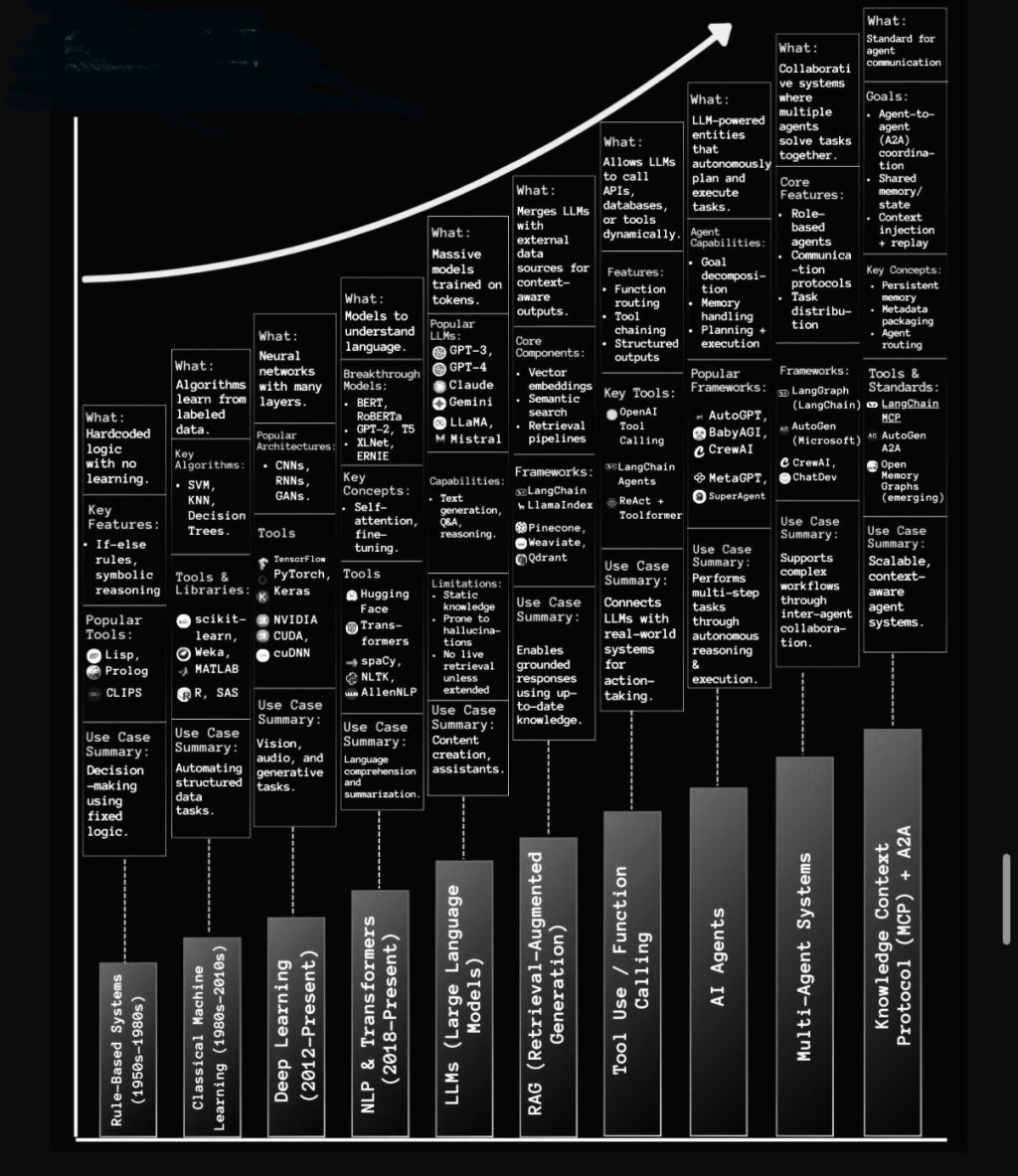

The trajectory of AI has been nothing short of extraordinary. What began as brittle rule-based systems—akin to glorified calculators—has matured into agentic, self-directed intelligences capable of reasoning, planning, and collaborating at scale. The visual above captures this ascent in vivid clarity: from symbolic systems in the 1950s to today’s multi-agent frameworks and autonomous context orchestration.

But beneath the visual progression lies a deeper story: one of human trust, ethical thresholds, and the redesign of economic, social, and political foundations. In this blog, I want to unpack this evolution not just technically, but philosophically and practically, drawing from global research (Stanford HAI, Alan Turing Institute), regulation (the EU AI Act and the UK’s pro-innovation AI framework), and the ideas in my book Genesis.

In my LinkedIn posts, I’ve explored how agentic AI is revolutionising human experiences, pondered if AI could outthink the greatest philosophers, and delved into safeguarding autonomy in an era of advanced systems. Today, drawing on extensive research including Stanford’s Human-Centered AI (HAI) Institute reports, Alan Turing’s foundational contributions, the UK AI Safety Institute’s work, the provocative ‘AI 2027’ scenario, and other pivotal studies as of July 2025, I’ll trace AI’s evolution from rigid symbolic rules to the brink of autonomous intelligence. This narrative underscores not just technological progress but the ethical imperatives we must navigate to ensure AI amplifies human potential.

The Foundations: Rule-Based Systems and Symbolic AI (1950s–1980s)

AI’s origins lie in the mid-20th century, rooted in symbolic logic and rule-based systems designed to emulate human reasoning. The field’s formal inception came at the 1956 Dartmouth Workshop, organised by John McCarthy and others, which optimistically projected human-level machine intelligence within decades. [27] Central to this era was Alan Turing’s pioneering work. In his 1950 paper ‘Computing Machinery and Intelligence’, Turing proposed the Imitation Game, now known as the Turing Test, as a benchmark for machine intelligence, asking whether computers could exhibit behaviour indistinguishable from that of humans. [10] Turing’s contributions extended beyond theory; his wartime work breaking the German Enigma cipher laid groundwork for computational problem-solving, influencing early AI. [11] [12]

Pioneering systems like the Logic Theorist (1955) by Newell and Simon demonstrated theorem-proving via symbol manipulation, embodying the Physical Symbol System Hypothesis. [47] Other milestones included Arthur Samuel’s 1952 checkers programme, an early machine learning example that improved through self-play, [6] and Joseph Weizenbaum’s 1966 ELIZA chatbot, which used pattern matching for rudimentary conversations but exposed NLP limitations. [2]

Yet, symbolic AI’s rigidity led to challenges, such as the ‘combinatorial explosion’ in complex scenarios. Expert systems like DENDRAL (1965) excelled in niche domains but faltered in ambiguity. [47] This culminated in the first AI winter (1974–1980), fuelled by unmet hype and funding cuts, exemplified by the UK’s 1973 Lighthill Report critiquing progress. [47]

The Rise of Machine Learning and Neural Networks (1980s–2010s)

As purely symbolic approaches waned, machine learning emerged, emphasising data-driven learning over hardcoded rules. The 1980s first saw a commercial resurgence of expert systems, building on earlier successes such as MYCIN (a 1970s system for medical diagnostics), [18] with Japan’s Fifth Generation Project investing heavily in knowledge-based AI. [47] Neural networks revived when backpropagation was popularised in 1986, making it practical to train multi-layer networks. [46]

The second AI winter (1987–1993) arose from hardware constraints and market failures, [47] but the 1990s brought narrow AI triumphs. IBM’s Deep Blue bested Garry Kasparov in chess in 1997 via advanced search algorithms, [1] while techniques like support vector machines powered everyday applications. [46] In 2011, IBM Watson’s Jeopardy! victory showcased strides in NLP. [0]

In my own reflections on LinkedIn, I’ve noted how these shifts mirror humanity’s philosophical evolution—from rigid doctrines to adaptive learning—echoing themes in Genesis where AI augments rather than replaces human ingenuity.

Deep Learning Revolution and Transformers (2010s–Present)

The 2010s exploded with deep learning, propelled by big data and GPUs. AlexNet’s 2012 ImageNet win drastically cut image recognition errors, [47] with AI investments hitting record highs by 2021. [47] The 2017 ‘Attention Is All You Need’ paper introduced transformers, revolutionising sequence processing. [24] This birthed LLMs like BERT (2018) and GPT-3 (2020), scaling to handle diverse tasks. [17] ChatGPT’s 2022 user surge democratised AI. [47]

Stanford HAI’s 2025 AI Index Report highlights this boom: record model performance gains, $119 billion in private investment (up 56% from 2024), and surging AI incidents (233 in 2024, a 56.4% rise), underscoring productivity boosts alongside risks. [35] [36] [38] [39] [52] Key breakthroughs include DeepMind’s 2021 AlphaFold solving protein folding and 2023’s generative agents simulating human behaviours.

As of 2025, models like Grok and Claude integrate multimodality and tool use, aligning with my LinkedIn discussions on agentic AI’s role in redefining human experiences. In posts like ‘The Evolution of Agentic AI’, I’ve emphasised how these systems evolve from reactive to proactive, mirroring philosophical notions of agency.

From Agents to Autonomous Intelligence (2020s–2025 and Beyond)

Contemporary AI advances towards autonomous agents that plan, act, and collaborate. Frameworks like LangChain and AutoGPT enable tool calling and self-reflection. Multi-agent systems (MAS), as in MetaGPT or CrewAI, foster role-based collaboration. Research on multi-agent debate suggests that several agents critiquing one another’s answers can outperform a single model working alone.

Safety is paramount. The UK AI Safety Institute (AISI), established in 2023, focuses on mitigating risks from advanced AI, building evaluation tools and informing policy. Their 2025 International AI Safety Report synthesises capabilities and risks, advocating for iterative governance. This resonates with my LinkedIn post ‘Rethinking AI Safety in the Age of Agentic Systems’, where I discuss safeguarding autonomy against misuse.

Timelines are compressing. The ‘AI 2027’ scenario by Kokotajlo, Alexander, and others envisions superhuman AI by 2027, potentially rivalling the Industrial Revolution’s impact, with risks like cyberwarfare. Expert forecasts, per 80,000 Hours’ 2025 review, suggest that expected timelines to AGI are shortening, which raises the urgency of safety work. Other papers, like the Center for AI Safety’s risk catalogue, highlight malicious use and rogue AI. The Singapore Consensus on AI Safety (2025) prioritises global research. McKinsey’s 2025 report on workplace AI stresses speed with safety.

In Genesis, I advocate for ethical frameworks, akin to PwC’s AI usage guidelines I’ve shared on LinkedIn, to navigate these horizons.

Conclusion: Towards a Human-Centric AI Future

AI’s evolution—from Turing’s symbolic foundations to agentic autonomy—heralds a profound shift. As Stanford HAI’s indices show accelerating capabilities, and with timelines like AI 2027 looming, we must prioritise safety via institutes like the UK’s AISI. In my view, as expressed in posts on agentic AI’s risks and potentials, the key is guardianship of human agency.

🔍 1. Rule-Based Systems → Classical Machine Learning: The Age of Determinism

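Early AI relied on fixed logic. Rule-based expert systems mimicked reasoning through hand-written if-then-else rules; classical algorithms such as decision trees, k-nearest neighbours (KNN), and support vector machines (SVMs) came later and learn from data rather than from hard-coded rules. The rule-based systems, while powerful in chess and diagnostics, lacked context awareness.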

📚 According to the Alan Turing Institute, over 80% of early AI failures stemmed from the brittleness of symbolic rules in real-world ambiguity.

🔄 Shift:

With the rise of classical machine learning (1980s–2000s), systems began to learn patterns from labelled data—especially in vision and speech. But these models still relied on narrow task-specific learning, demanding feature engineering and heavy human supervision.

🤖 2. Deep Learning & NLP: The Era of Representation

2012’s ImageNet breakthrough marked a renaissance. Deep learning, powered by CNNs and RNNs, enabled machines to “see” and “understand” through layered abstraction.

By 2018, Transformers had revolutionised Natural Language Processing (NLP), ushering in models like BERT, RoBERTa, and the GPT family.

📊 According to Stanford HAI’s 2024 AI Index, LLMs trained on >1T tokens saw a 40% increase in generalisation across unseen domains, compared to RNNs.

But this new capability posed a new dilemma: opacity. These models became black boxes, optimising outputs without reasoning why—a recurring theme I challenge in Genesis.

🧠 3. LLMs → RAG: The Search for Context

As we scaled LLMs (GPT-3, Claude, LLaMA), a realisation set in: the models hallucinate. Enter Retrieval-Augmented Generation (RAG)—a hybrid paradigm combining LLM fluency with grounded external facts.
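To make the retrieval idea concrete, here is a minimal sketch, assuming a toy in-memory corpus and a simple word-overlap scorer. Both are hypothetical stand-ins for a real document store and embedding model, and the names `retrieve` and `build_prompt` are illustrative rather than taken from any library. It shows only the grounding step: fetch the most relevant passage, then place it in the prompt ahead of the question.

```python
from __future__ import annotations

import re

# Toy corpus standing in for an enterprise knowledge base (illustrative only).
DOCUMENTS = [
    "The EU AI Act classifies AI systems by risk level.",
    "Deep Blue defeated Garry Kasparov at chess in 1997.",
    "Transformers were introduced in the 2017 paper Attention Is All You Need.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 1) -> list[str]:
    """Rank documents by word overlap with the query and return the top k."""
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 1) -> str:
    """Prepend retrieved passages so a model can answer from grounded context."""
    context = "\n".join(f"- {passage}" for passage in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    print(build_prompt("Who defeated Kasparov at chess?", DOCUMENTS))
```

In a production pipeline the overlap scorer would typically be replaced by vector similarity search, and the assembled prompt would be sent to an LLM; neither is shown here.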

🧮 According to Meta AI, RAG pipelines reduce hallucinations by up to 68% when paired with verifiable knowledge graphs or enterprise search.

In PwC’s own deployments across financial services, we observed RAG-based copilots outperforming static LLMs on compliance tasks by 45% in accuracy, particularly in ESG and regulatory contexts.

💡 Why it matters: The shift from text prediction to truthful retrieval marked a pivot toward verifiable intelligence—a step closer to trust.

⚙️ 4. Tool Use & Function Calling: Cognitive Action Begins

Now, LLMs don’t just answer; they act. They call APIs, run simulations, query databases, and automate workflows. This is where AI transitions from “talking” to “doing.”
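A rough sketch of how function calling tends to work in practice: the model emits a structured request naming a tool and its arguments, and the host program looks up and runs the matching function. The JSON shape, the `tool` decorator, and `convert_currency` below are all hypothetical, not any vendor’s API, and the model output is hard-coded so the example stays self-contained.

```python
import json
from typing import Any, Callable, Dict

# Registry mapping tool names to plain Python functions (names are hypothetical).
TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(func: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function so the dispatcher can call it by name."""
    TOOLS[func.__name__] = func
    return func


@tool
def convert_currency(amount: float, rate: float) -> float:
    """Multiply an amount by an exchange rate, rounded to two decimal places."""
    return round(amount * rate, 2)


def dispatch(model_output: str) -> Any:
    """Parse a JSON tool request and run the matching registered function."""
    request = json.loads(model_output)
    name = request["tool"]
    if name not in TOOLS:
        raise ValueError(f"Unknown tool: {name}")
    return TOOLS[name](**request.get("arguments", {}))


if __name__ == "__main__":
    # Hard-coded stand-in for what a model might emit when it decides to act.
    fake_model_output = (
        '{"tool": "convert_currency", "arguments": {"amount": 100, "rate": 0.5}}'
    )
    print(dispatch(fake_model_output))
```

Rejecting unknown tool names, rather than guessing, is a deliberate choice: the host program, not the model, decides what is allowed to run.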

🔍 In my blog “Beyond the Prompt: Architecting Agentic AI”, I wrote about this moment as the beginning of actionable cognition—AI agents not just knowing what, but executing how.

🧾 The UK’s AI Safety Institute highlighted in its 2025 evaluation that LLMs with integrated tool use showed 80% task completion accuracy in autonomous report generation, vs. 54% without tools.

🧠 5. AI Agents → Multi-Agent Systems: Orchestrated Intelligence

The true intelligence leap occurs when agents collaborate. Multi-agent systems distribute goals, negotiate trade-offs, and reflect—like a team of consultants or a corporate function.
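The collaborate-and-reflect loop described above can be sketched with two deterministic stand-ins for agents: a writer that drafts and a reviewer that checks the draft against required points. In a real system each role would be backed by a model call; the function names, the feedback format, and the stopping rule here are assumptions made for illustration.

```python
from typing import List, Tuple


def writer(task: str, feedback: List[str]) -> str:
    """Produce a draft; each feedback note is addressed by appending a marker."""
    draft = f"Draft for: {task}"
    for note in feedback:
        draft += f" [addressed: {note}]"
    return draft


def reviewer(draft: str, required: List[str]) -> List[str]:
    """Return the required points that the draft does not yet mention."""
    return [point for point in required if point not in draft]


def collaborate(task: str, required: List[str], max_rounds: int = 3) -> Tuple[str, int]:
    """Alternate writer and reviewer until no issues remain or rounds run out."""
    feedback: List[str] = []
    draft = writer(task, feedback)
    for round_number in range(1, max_rounds + 1):
        issues = reviewer(draft, required)
        if not issues:
            return draft, round_number
        feedback.extend(issues)
        draft = writer(task, feedback)
    return draft, max_rounds


if __name__ == "__main__":
    final_draft, rounds = collaborate("supplier shortlist", ["budget", "risk"])
    print(rounds, final_draft)
```

The `max_rounds` cap matters in practice: without it, two agents that never agree would loop indefinitely.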

📌 Use cases:

- Autonomous procurement agents
- Fraud detection swarms
- Personalised learning ecosystems

In Genesis, I referred to this phase as Synthetic Organisms—AI entities that reason, argue, and improve each other’s thinking, mirroring human symbiosis.

🧩 PwC’s internal research across APAC found that agent-based design reduced turnaround time in knowledge management systems by 52%, while increasing user satisfaction scores in HX by 23 points.

🧠 6. MCP and Context-Aware Systems: The New Operating System for AI

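The final step? The Model Context Protocol (MCP) and context-aware orchestration. MCP is an open standard for connecting models to external tools and data sources; together with agent frameworks (e.g. LangGraph, AutoGen, CrewAI) that provide shared memory, it enables cross-agent collaboration, replay, and context persistence.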

Think of it as a brain shared by multiple minds—coordinated through memory, planning, and context switching.
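One way to picture the “shared brain” is as an append-only log that every agent can write to and read from. The sketch below is an assumption-laden illustration of that idea only; it is not an implementation of MCP or of any named framework, and the `SharedContext` class and its methods are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Entry:
    """A single contribution to the shared context."""
    agent: str
    content: str


@dataclass
class SharedContext:
    """Append-only log that several agents read from and write to."""
    entries: List[Entry] = field(default_factory=list)

    def write(self, agent: str, content: str) -> None:
        """Record what an agent decided or produced."""
        self.entries.append(Entry(agent, content))

    def read(self, last_n: int = 5) -> List[str]:
        """Return the most recent entries as 'agent: content' strings."""
        return [f"{e.agent}: {e.content}" for e in self.entries[-last_n:]]

    def replay(self) -> List[str]:
        """Return the full history in order, for example for audit or debugging."""
        return [f"{e.agent}: {e.content}" for e in self.entries]


if __name__ == "__main__":
    context = SharedContext()
    context.write("planner", "split the report into three sections")
    context.write("executor", "drafted section one")
    print(context.read(last_n=1))
    print(context.replay())
```

Keeping the log append-only is what makes replay possible: nothing an agent wrote is overwritten, so the full chain of decisions can be inspected afterwards.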

“When agents can remember, reason, and reflect across time—AI stops being reactive and starts being strategic.”

📘 In my upcoming research Walking the FutureBack, I explore how enterprises will soon build AI org charts: planners, strategists, enforcers, and reviewers—coded into memory-aware frameworks.

🎯 Why This Evolution Demands Governance, Ethics, and HX

With capability comes consequence. The EU AI Act (2024) and the UK’s pro-innovation AI framework both call for heightened oversight of:

- Agent autonomy
- Decision traceability
- Embedded values (e.g. fairness, accountability)

🛡️ As I’ve argued in my blog “Securing Agentic AI at the 11th Hour”, it is precisely when AI becomes agentic that trust must be designed, not assumed.

This is why PwC is championing:

- AI Safety Engineering for agent workflows
- Human Experience (HX) audits for dignity, transparency, and empathy
- Agentic Governance Models drawing from multi-disciplinary domains (psychology, law, cybernetics)

✨ Final Reflection: Agents Are Here—But Are We Ready?

We stand at the edge of a new era. AI isn’t just an assistant anymore—it’s a colleague, a planner, a decision-maker. It reflects, acts, collaborates, and remembers. But while its rise is inevitable, our preparedness is not.

📣 Let’s not only build smarter agents—but wiser frameworks around them.

🔗 Follow more of my thinking on LinkedIn and in Genesis: Human Experience in the Age of AI. Together, let’s shape an AI future that works with humanity, not around it.
