By Dr Luke Soon | AI Ethicist | Partner, PwC | Author of “Genesis: Human Experience in the Age of AI”

In recent months, I’ve found myself increasingly asked a simple but existential question: “Will Agentic AI take our jobs, or help us do them better?”

It is a question that has been top of mind for me for the last few years. I often explore how we must design for trust and human experience (HX), not just operational efficiency, when deploying powerful AI systems.

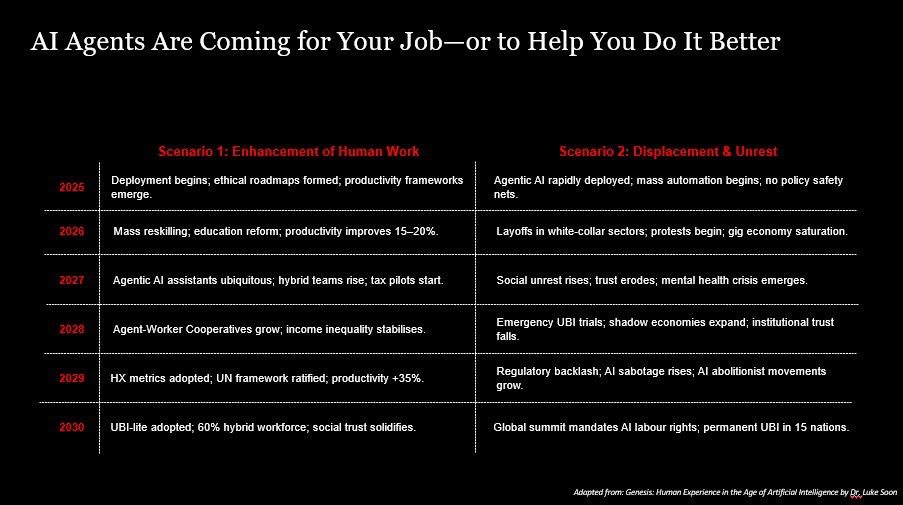

In this post, I want to share two contrasting futures that I’ve modelled out to 2030. Both begin in 2025 with the widespread introduction of Agentic AI—AI systems that plan, reason, and take goal-directed actions with a high degree of autonomy. Yet their outcomes could not be more different.

These scenarios draw upon emerging research from Stanford HAI’s 2025 AI Index Report, the PwC AI Jobs Barometer 2025, and my own HX = CX + EX framework, which integrates customer experience (CX) and employee experience (EX) into a holistic design of the future of work.

Scenario 1: Agentic AI Enhances Human Work

In this more optimistic future, Agentic AI becomes an augmentation layer—working with us, not instead of us.

🔹 2025 – The Year of Deployment with Guardrails

Major enterprises begin embedding Agentic AI into workflows: scheduling meetings, drafting presentations, conducting legal research, and more. PwC, Microsoft, and others release governance models to ensure these agents operate transparently and ethically.

As I shared during my panel at SuperAI 2024, this period marks the shift from Next Best Action to Best Next Experience—not just automating decisions but elevating human judgment through AI assistance.

🔹 2026–2027 – Reskilling and Rewiring

By 2026, companies realise productivity gains of up to 20%, according to the PwC AI Jobs Barometer. More importantly, they invest heavily in upskilling programmes. Micro-credentialing in AI collaboration becomes standard.

Education systems introduce AI literacy, and AI-human co-work certifications become the new MBA. Human workers are valued for what agents cannot do—empathetic communication, strategic foresight, ethical judgment.

🔹 2028–2030 – A Thriving Hybrid Workforce

By 2030, over 60% of the global workforce is working with Agentic AI. Humans delegate repetitive and administrative tasks to agents and focus on meaningful, creative, and strategic roles.

Governments respond with UBI-lite programmes—what I call Participation Income—which reward learning, caregiving, and community-building.

Stanford HAI’s 2025 AI Index Report supports this view, highlighting how AI-driven productivity, when properly governed, leads to wage growth and reduced inequality in well-regulated economies.

“The true future of work isn’t post-human—it’s profoundly human. The frictionless potential of Agentic AI must be grounded in human dignity.”

— Dr Luke Soon, Genesis

Scenario 2: Agentic AI Displaces Human Work

But not all roads lead to empowerment. Without trust, foresight, and inclusive governance, Agentic AI can trigger destabilising labour shocks.

🔻 2025–2026 – Mass Automation Without Safety Nets

Corporates, under pressure to cut costs, deploy Agentic AI with little regard for human impact. Call centres, operations teams, marketing assistants—roles once seen as safe—are replaced.

PwC’s Barometer warns of significant displacement risk in mid-tier white-collar jobs, particularly in finance, legal services, and logistics. Unemployment begins to spike, and governments are caught off guard.

🔻 2027–2028 – Political Polarisation and Social Strain

Gig platforms swell with displaced workers, driving down wages. Social media is rife with the hashtags #WeAreNotReplaceable and #HumansOverAgents. Suicide and burnout rise, particularly among knowledge workers whose identities were tied to their jobs.

Populist, anti-tech parties gain traction globally. Countries like Brazil, France, and South Korea launch emergency UBI trials, while others face unrest and institutional collapse.

🔻 2029–2030 – The Reckoning

In 2029, the UN declares an AI Human Rights Emergency. Agentic AI is banned from certain sectors. Shadow economies grow. Trust in both governments and Big Tech plummets.

Eventually, a Digital Labour Rights Charter is signed, outlining protections including the right to meaningful work, reskilling access, and algorithmic transparency. But the scars of inaction linger.

The Fork in the Road: What Must Be Done Today

We are not passive passengers in this unfolding story. The difference between these two futures hinges on decisions we make now.

🔸 What Governments Must Do

Mandate AI Impact Assessments before agent deployment. Fund national reskilling programmes with a focus on AI-human teaming. Trial adaptive income models (Participation Income or partial UBI) to buffer shocks. Create AI Rights & Governance Charters in collaboration with academia, industry, and civil society.

🔸 What Companies Must Do

View Agentic AI not as a cost-cutting tool but as a co-creation partner. Measure success by HX metrics, not just ROI. Design work intentionally—assign agents to workflows, not jobs. Involve employees in the design of their augmented futures.

Final Reflection

Agentic AI represents the most profound labour shift since the Industrial Revolution. But as I often remind my clients and teams:

“The purpose of technology is not efficiency—it is elevation. If AI does not deepen human potential and dignity, then we are solving the wrong problem.”

Let us walk the FutureBack, not blindly rush PresentForward. The age of Agentic AI is not inevitable in its impact—it is a mirror reflecting our collective choices.

Let’s choose trust. Let’s choose humanity. Let’s design the future together.

If you found this thought-provoking, I welcome your perspectives and provocations on LinkedIn. We are all stakeholders in what comes next.
