By Luke Soon

AI Ethicist | HX Strategist | Author of “Genesis: Human Experience in the Age of AI”

⸻

As we move from static question-answering to adaptive, deliberative AI systems, the architecture behind our AI agents matters more than ever. Retrieval-Augmented Generation (RAG), once a niche technique for grounding LLMs in external data, is now mutating into complex, agent-driven, protocol-based intelligence.

In this piece, I explore the shift from Naive RAG to Agentic RAG—and map it against the emerging frameworks of agent orchestration: MCP, A2A, ANP, and ACP. These dual lenses offer a powerful toolkit to frame how we can build, govern, and evolve intelligent, trustworthy systems.

⸻

Part 1: The Evolution of RAG Architectures

- Naive RAG

At its core, Naive RAG is a basic, single-pass “embed-retrieve-generate” pipeline. The user query is embedded, matched against a vector store (e.g., MyScaleDB), and the top-k documents are passed to the LLM as context. There is no query refinement, no re-ranking, and no check that the retrieved context is actually relevant: this is retrieval at face value.

Limitations:

• No context refinement

• Prone to semantic drift

• Poor grounding for ambiguous or complex queries
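
To make the pipeline concrete, here is a minimal Python sketch of the embed-retrieve-generate flow. It assumes a toy bag-of-words "embedding" and an in-memory list in place of a real embedding model and vector store such as MyScaleDB, and it stops at building the prompt rather than calling an LLM. The corpus and the `embed`, `retrieve`, and `build_prompt` helpers are illustrative, not any library's API.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    # Toy "embedding": a bag-of-words term-count vector. A real system would call
    # an embedding model and store the vectors in a vector database.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    # Embed the query, score every document, keep the top-k. No rewriting, no re-ranking.
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_prompt(query: str, context: list[str]) -> str:
    # The retrieved chunks go to the LLM verbatim; the model call itself is omitted.
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using this context:\n{joined}\n\nQuestion: {query}"


docs = [
    "flights to tokyo depart daily from singapore",
    "hotel prices in tokyo rise in spring",
    "the weather in london is mild in may",
]
context = retrieve("flights to tokyo", docs, k=1)
print(build_prompt("flights to tokyo", context))
```

The limitations above are built in: the query is used exactly as typed, and whatever scores highest is passed on without any further check.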

- Advanced RAG

This iteration introduces Query Rewriting and Re-ranking, acting as cognitive pre- and post-processing layers around retrieval. The system rewrites the query for better retrieval alignment, retrieves candidates, then re-ranks them before passing the strongest to the LLM.

Why this matters:

• Can reduce hallucinations

• Improves grounding fidelity

• Mimics early cognition: sense > clarify > decide
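
A rough sketch of those two extra stages, under loud assumptions: the hypothetical `SYNONYMS` table stands in for LLM-based query rewriting, and the concept-coverage `rerank` function stands in for a cross-encoder or LLM re-ranker. Embeddings are a toy bag-of-words stand-in so the block runs on its own; none of the helper names come from a real library.

```python
import math
from collections import Counter

# Hypothetical synonym table. Production systems usually ask an LLM to rewrite the query.
SYNONYMS = {"cheap": ["budget", "affordable"], "hotel": ["accommodation"]}


def embed(text: str) -> Counter:
    return Counter(text.lower().split())  # toy bag-of-words embedding


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def rewrite(query: str) -> str:
    # Query rewriting: expand each term with its synonyms to match document wording.
    terms = query.lower().split()
    return " ".join(terms + [s for t in terms for s in SYNONYMS.get(t, [])])


def first_stage(query: str, docs: list[str], k: int) -> list[str]:
    # Cheap, recall-oriented retrieval over the whole corpus.
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def rerank(query: str, candidates: list[str]) -> list[str]:
    # Stand-in for a cross-encoder or LLM re-ranker: prefer candidates that cover
    # more of the user's distinct concepts (a term or any of its synonyms).
    concepts = [{t, *SYNONYMS.get(t, [])} for t in query.lower().split()]

    def coverage(doc: str) -> float:
        words = set(doc.lower().split())
        return sum(1 for c in concepts if c & words) / len(concepts)

    return sorted(candidates, key=coverage, reverse=True)


docs = [
    "budget accommodation near the station",
    "luxury hotel with spa",
    "affordable hotel rooms in the old town",
    "hotel hotel hotel listings",
]
user_query = "cheap hotel"
candidates = first_stage(rewrite(user_query), docs, k=3)
ranked = rerank(user_query, candidates)
print(ranked)
```

In this toy corpus, retrieving with the raw query never surfaces the "budget accommodation" document, and the keyword-stuffed listing ranks first until re-ranking pushes it to the bottom.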

- Multi-Modal RAG

Now the LLM sees more than text. Multimodal embedding models map images and text into a shared vector space, so a query in one modality can retrieve context in another. This opens up use cases in healthcare, surveillance, and XR.

Architecture Highlights:

• Dual-store indexing (image + text)

• Multimodal similarity search

• Multimodal LLMs (e.g., GPT-4o, Gemini) as reasoning engines
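
The dual-store idea can be sketched as follows, assuming both stores hold vectors from one shared text-image embedding space (CLIP-style encoders are a common way to get this). The three-dimensional vectors, item names, and the `multimodal_search` helper are hypothetical placeholders; a real system would get vectors from an encoder and query a vector database.

```python
import math
from dataclasses import dataclass


@dataclass
class Item:
    modality: str        # "text" or "image"
    ref: str             # hypothetical document id or image path
    vector: list[float]  # embedding in a space shared by text and images


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# Dual-store indexing: one store per modality. The 3-d vectors are hand-written
# placeholders for what a shared text-image encoder would produce.
text_store = [
    Item("text", "radiology_report_17", [0.9, 0.1, 0.0]),
    Item("text", "billing_faq", [0.0, 0.2, 0.9]),
]
image_store = [
    Item("image", "chest_xray_17.png", [0.8, 0.3, 0.1]),
    Item("image", "hospital_logo.png", [0.1, 0.1, 0.9]),
]


def multimodal_search(query_vec: list[float], k: int = 2) -> list[Item]:
    # Search both stores with the same query vector and merge hits by similarity.
    hits = [(cosine(query_vec, item.vector), item) for store in (text_store, image_store) for item in store]
    hits.sort(key=lambda h: h[0], reverse=True)
    return [item for _, item in hits[:k]]


# Placeholder for the embedding of a text query such as "chest x-ray findings".
query_vec = [1.0, 0.2, 0.0]
for item in multimodal_search(query_vec):
    print(item.modality, item.ref)  # these hits would be handed to a multimodal LLM
```

A single text query returns both the written report and the matching scan, which is the cross-modal retrieval the highlights above describe.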

- Agentic RAG

This is where things get exciting. The model isn’t just retrieving; it decides how to retrieve, what is missing, and whether to search again. The LLM agent rewrites queries, evaluates whether the retrieved context is sufficient to answer, and loops until it is.

Key Features:

• Dynamic query augmentation

• Agent reasoning checkpoints (e.g., “Do I need more details?”)

• Source strategy (vector DBs vs tools vs Internet)

This is RAG-as-a-thinking-entity, no longer just a smarter search engine but a recursive problem solver.
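
Here is a deliberately small sketch of that control loop. Every judgement an LLM agent would make (which source to use, whether more detail is needed, how to augment the query) is replaced by a hypothetical rule-based stub, the three sources are in-memory dictionaries, and the `max_steps` budget is an added assumption so the loop always terminates.

```python
# Hypothetical knowledge sources standing in for a vector DB, a tool/API, and web search.
# Exact-key lookups keep the sketch deterministic; real retrieval would be fuzzy.
SOURCES = {
    "vector_db": {"refund policy": "Refunds are accepted within 30 days of purchase."},
    "tools": {"order status 123": "Order 123 shipped yesterday."},
    "web": {"refund policy latest": "An updated refund notice was posted this week."},
}


def search(source: str, query: str) -> list[str]:
    hit = SOURCES[source].get(query)
    return [hit] if hit else []


def choose_source(query: str) -> str:
    # Source strategy: operational lookups go to a tool, time-sensitive queries to
    # the web, everything else to the vector DB. An LLM would normally decide this.
    if query.startswith("order status"):
        return "tools"
    return "web" if "latest" in query else "vector_db"


def needs_more(question: str, context: list[str]) -> bool:
    # Reasoning checkpoint ("Do I need more details?"). Stub rule: ask for more if
    # nothing was found, or if the question wants recent facts and none are present.
    wants_recent = "recent" in question or "latest" in question
    has_recent = any("this week" in c for c in context)
    return not context or (wants_recent and not has_recent)


def augment(query: str) -> str:
    # Dynamic query augmentation: add a recency qualifier if it is not there yet.
    return query if "latest" in query else f"{query} latest"


def agentic_rag(question: str, query: str, max_steps: int = 3):
    context: list[str] = []
    trace: list[tuple[str, str]] = []
    for _ in range(max_steps):  # step budget keeps the loop bounded
        source = choose_source(query)
        context += search(source, query)
        trace.append((source, query))
        if not needs_more(question, context):
            break
        query = augment(query)
    return context, trace


context, trace = agentic_rag("What is the most recent refund policy?", "refund policy")
print(trace)
```

Asked about the most recent policy, the agent first searches the vector DB, judges the answer stale, augments the query, and switches to the web; an order-status question is routed to the tool and stops after one step.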

⸻

Part 2: Agent Orchestration Frameworks—From Tool Users to Collaborators

To handle complex tasks like travel planning, enterprise automation, or medical diagnosis, we need cooperative intelligence—not just isolated LLMs but agentic ecosystems. Here’s how various orchestration models map onto this vision.

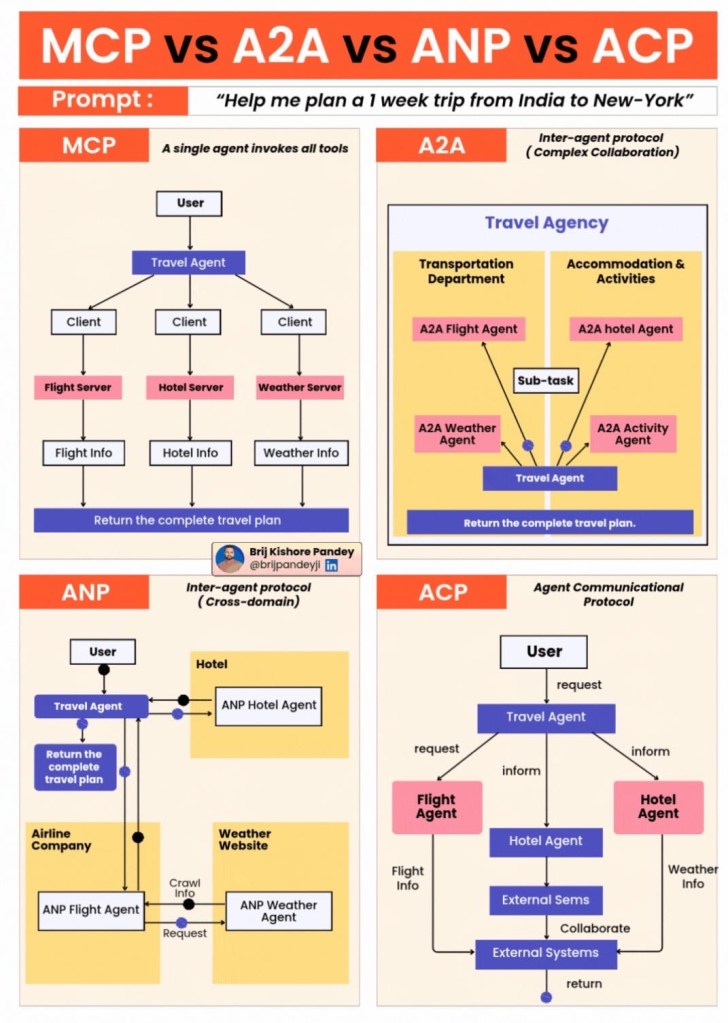

MCP (Model Context Protocol)

A single agent (e.g., a travel agent) controls the entire flow, calling tools, APIs, and data sources as needed; MCP standardises how the agent connects to and invokes them.

Pros: Simplicity.

Cons: No inter-agent negotiation, limited scalability.
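
A minimal sketch of the single-agent pattern: one agent owns the whole plan and calls tools itself. The request messages follow the JSON-RPC 2.0 shape that MCP uses for its `tools/call` method, but transport, the initialization handshake, and tool discovery via `tools/list` are omitted, and the flight and hotel tools are hypothetical stubs served in-process.

```python
import json
from itertools import count


# Hypothetical tools. In a real deployment they would sit behind an MCP server.
def search_flights(origin: str, destination: str) -> str:
    return f"2 flights found {origin}->{destination}"


def search_hotels(city: str) -> str:
    return f"3 hotels found in {city}"


TOOLS = {"search_flights": search_flights, "search_hotels": search_hotels}
_request_ids = count(1)


def make_call(name: str, arguments: dict) -> str:
    # A JSON-RPC 2.0 request shaped like MCP's "tools/call" message.
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    })


def serve(message: str) -> dict:
    # In-process stand-in for the tool server: decode, dispatch, wrap the result.
    request = json.loads(message)
    params = request["params"]
    text = TOOLS[params["name"]](**params["arguments"])
    return {"jsonrpc": "2.0", "id": request["id"],
            "result": {"content": [{"type": "text", "text": text}]}}


def travel_agent(origin: str, destination: str) -> list[str]:
    # The single agent owns the whole plan and decides which tools to call, in order.
    plan = [
        ("search_flights", {"origin": origin, "destination": destination}),
        ("search_hotels", {"city": destination}),
    ]
    return [serve(make_call(name, args))["result"]["content"][0]["text"] for name, args in plan]


print(travel_agent("SIN", "Tokyo"))
```

The simplicity is visible in the code: there is exactly one decision-maker, which is also why nothing here can negotiate with another agent.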

A2A (Agent2Agent Protocol)

Think of this as intra-domain collaboration. Sub-agents (flight, hotel, weather) are invoked by the lead agent, and each sub-agent owns part of the task.

Analogy: Like an enterprise with specialised departments.

Strength: Modularisation and task-level delegation.

Use Case: Complex workflows in banking or logistics.
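
The lead-agent-plus-departments structure can be sketched as a registry of sub-agents, each declaring the one kind of task it owns. The class names, task kinds, and return strings are hypothetical, and everything runs in-process; the sketch does not implement the A2A protocol's discovery mechanism or wire format.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    kind: str
    payload: dict = field(default_factory=dict)


class SubAgent:
    """Owns one slice of the overall task, like a department."""
    handles = ""

    def run(self, task: Task) -> str:
        raise NotImplementedError


class FlightAgent(SubAgent):
    handles = "flight"

    def run(self, task: Task) -> str:
        return f"flight booked to {task.payload['city']}"


class HotelAgent(SubAgent):
    handles = "hotel"

    def run(self, task: Task) -> str:
        return f"hotel reserved in {task.payload['city']} for {task.payload['nights']} nights"


class WeatherAgent(SubAgent):
    handles = "weather"

    def run(self, task: Task) -> str:
        return f"forecast retrieved for {task.payload['city']}"


class LeadAgent:
    def __init__(self, agents: list[SubAgent]):
        # Registry keyed by the capability each sub-agent declares.
        self.registry = {agent.handles: agent for agent in agents}

    def plan(self, city: str, nights: int) -> list[Task]:
        # Decompose the request into department-level tasks.
        return [
            Task("flight", {"city": city}),
            Task("hotel", {"city": city, "nights": nights}),
            Task("weather", {"city": city}),
        ]

    def run(self, city: str, nights: int) -> dict[str, str]:
        # Delegate each task to the sub-agent that owns it and collect the results.
        return {task.kind: self.registry[task.kind].run(task) for task in self.plan(city, nights)}


lead = LeadAgent([FlightAgent(), HotelAgent(), WeatherAgent()])
print(lead.run("Tokyo", 3))
```

Adding a new department means registering one more `SubAgent` subclass; the lead agent's delegation logic stays unchanged, which is the modularisation benefit described above.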

ANP (Agent Network Protocol)

This unlocks cross-domain collaboration. Each sub-agent can invoke other agents or external sources to fulfil its part of the plan. Now agents are autonomous nodes, not just reactive clients.

Implication: the system begins to resemble a human organisational network, one that is interdependent, asynchronous, and context-aware.
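
To illustrate agents as network nodes, this sketch lets each agent call peers from other domains (hotels calling maps calling events) to finish its part of the plan. The agent names, skills, and the call-chain guard that stops cyclic calls are illustrative assumptions, not part of any ANP specification.

```python
class NetworkAgent:
    """An autonomous node that can call peers from other domains."""

    def __init__(self, name: str, skill: str, peers: tuple[str, ...] = ()):
        self.name, self.skill, self.peers = name, skill, peers
        self.network = None  # set when the agent joins a network

    def handle(self, request: str, chain: tuple[str, ...] = ()) -> dict:
        chain = chain + (self.name,)
        # Call each peer this agent depends on, skipping any already in the call
        # chain so that cyclic dependencies cannot recurse forever.
        used = [self.network.call(p, request, chain) for p in self.peers if p not in chain]
        return {"agent": self.name, "result": f"{self.skill} for {request}", "used": used}


class AgentNetwork:
    def __init__(self):
        self.agents: dict[str, NetworkAgent] = {}

    def join(self, agent: NetworkAgent) -> None:
        agent.network = self
        self.agents[agent.name] = agent

    def call(self, name: str, request: str, chain: tuple[str, ...] = ()) -> dict:
        return self.agents[name].handle(request, chain)


net = AgentNetwork()
net.join(NetworkAgent("hotel", "shortlist hotels", peers=("maps",)))
net.join(NetworkAgent("maps", "rank by distance to venue", peers=("events",)))
net.join(NetworkAgent("events", "find the conference venue", peers=("hotel",)))  # cycle back
result = net.call("hotel", "Tokyo")
print(result)
```

Unlike the lead-agent registry in A2A, no single agent here knows the whole plan; each node only knows which peers it depends on.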

ACP (Agent Communication Protocol)

Here the protocol itself becomes the product. Agents not only collaborate but inform, adapt, and communicate asynchronously—mirroring human consensus-building processes.

Structure:

• Agents “inform” others, not just return results.

• Information exchange becomes bidirectional.

• Ideal for dynamic, multi-turn tasks (e.g., real-time crisis response, multi-market trading).
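
The "inform" style of communication can be sketched with `asyncio`: each agent has its own mailbox, pushes information to peers unprompted, and adapts when a message arrives. The crisis-response scenario, the `Message` fields, and the agent names are hypothetical, and the sketch does not follow any specific ACP wire format.

```python
import asyncio
from dataclasses import dataclass


@dataclass
class Message:
    kind: str     # e.g. "inform": push knowledge to a peer, not just reply to a request
    sender: str
    content: str


class Agent:
    def __init__(self, name: str):
        self.name = name
        self.inbox: asyncio.Queue = asyncio.Queue()  # per-agent mailbox
        self.log: list[str] = []

    async def inform(self, other: "Agent", content: str) -> None:
        await other.inbox.put(Message("inform", self.name, content))

    async def receive(self) -> Message:
        msg = await self.inbox.get()
        self.log.append(f"{msg.sender}: {msg.content}")
        return msg


async def field_agent(me: Agent, hq: Agent) -> None:
    # Report what was observed without being asked, then wait for the adapted plan.
    await me.inform(hq, "bridge A closed")
    await me.receive()


async def hq_agent(me: Agent, field: Agent) -> None:
    # Adapt the plan to the new information and inform back: the exchange is bidirectional.
    msg = await me.receive()
    plan = "reroute convoy via bridge B" if "closed" in msg.content else "proceed as planned"
    await me.inform(field, plan)


async def main() -> tuple[list[str], list[str]]:
    field, hq = Agent("field"), Agent("hq")
    # Both agents run concurrently and communicate only through their mailboxes.
    await asyncio.gather(field_agent(field, hq), hq_agent(hq, field))
    return field.log, hq.log


field_log, hq_log = asyncio.run(main())
print(field_log, hq_log)
```

A real deployment would replace the in-memory queues with a network transport and let agents keep exchanging messages over many turns.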

⸻

Synthesis: What This Means for the Future of HX

We are no longer designing LLM apps—we are designing Agentic Architectures of Thought. Whether through Agentic RAG or ACP-style protocol design, we are embedding intentionality, judgement, and purpose into our AI systems.

As I wrote in Genesis, HX (Human Experience) = CX (Customer Experience) + EX (Employee Experience), but the deeper narrative is:

“Humans are now both the consumers and orchestrators of experience. As AI takes over more frictionless sub-tasks, our role shifts to designing the frameworks that encode our values.”

With Agentic AI, the real work lies in governance, deliberation, and ethical coherence. Because when LLM agents begin to decide what matters, we must ensure that what matters still reflects human-centred intent.

⸻

Closing Thought

The convergence of RAG evolution and multi-agent orchestration is not just a technical upgrade—it’s a paradigm shift. From tool use to protocol governance. From retrieval to reason. From automation to agency.

If you’re building in this space, the question is no longer “What can my LLM do?”

It’s:

“What kind of society am I designing into my AI ecosystem?”
