By Luke Soon | AI Ethicist & HX Strategist

The term “AI model” is thrown around with reckless abandon these days. But as we move deeper into the era of intelligent systems, it’s clear that the monolithic “AI” is fragmenting into a family of highly specialised models, each architected for distinct tasks, environments, and modalities.

As someone working in AI strategy and human experience (HX), I often find that decoding these models helps organisations better align their digital bets with practical outcomes. So here’s a breakdown of 8 key AI model types—and where each is quietly making a difference in our world.

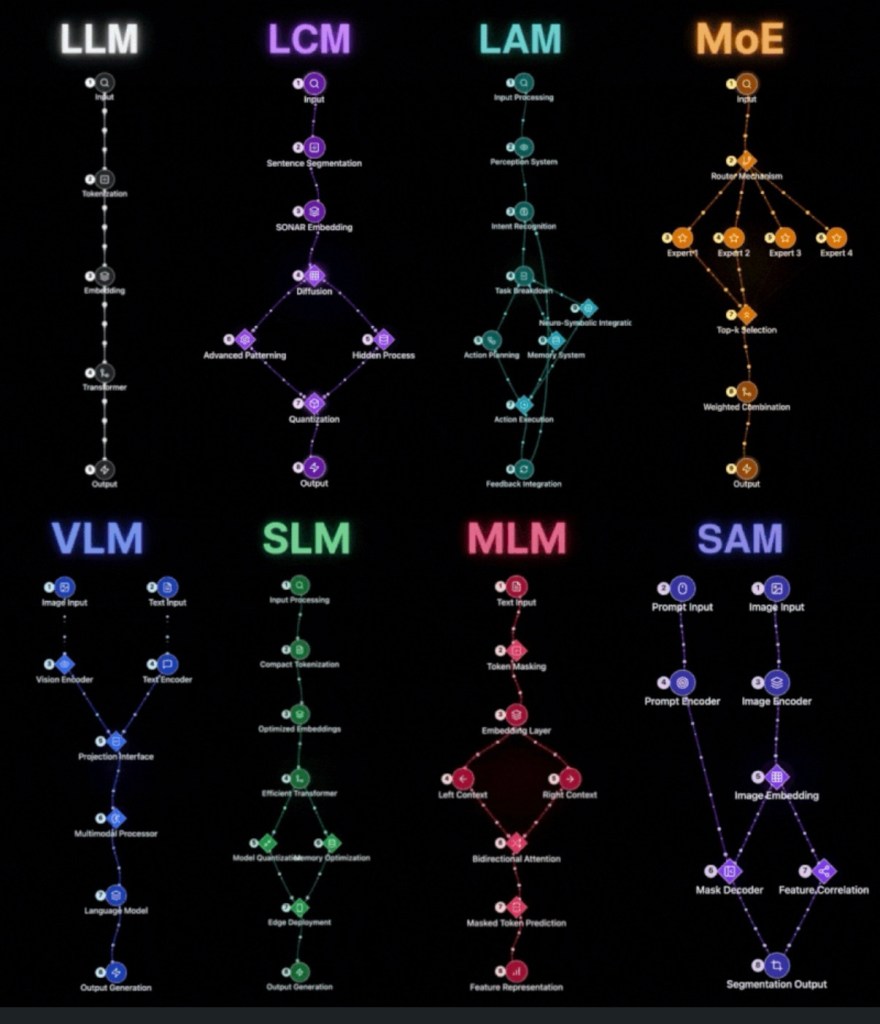

1. LLM (Large Language Model)

The conversationalist and content creator

LLMs are what most people associate with generative AI—language-based, autoregressive models trained on vast text corpora.

Use Case: Customer support chatbots, legal document drafting, code generation, policy simulation.

Example: At a Southeast Asian bank, we deployed an LLM fine-tuned on internal knowledge to assist relationship managers with real-time client briefings.
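To make “autoregressive” concrete, here is a minimal sketch in which a toy word-count table stands in for a trained LLM. The corpus, the function names, and the greedy decoding rule are all illustrative assumptions. A real LLM replaces the count table with a neural network over sub-word tokens, but the generation loop has the same shape: predict the next token from what has been produced so far, append it, repeat.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count which word follows which: a toy stand-in for a trained LLM."""
    tokens = text.split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def generate(counts, start, max_new_tokens=5):
    """Autoregressive loop: each new token is predicted from the output so far."""
    out = [start]
    for _ in range(max_new_tokens):
        followers = counts.get(out[-1])
        if not followers:
            break  # nothing ever followed this word in the corpus
        # Greedy decoding: take the most frequent follower
        out.append(followers.most_common(1)[0][0])
    return " ".join(out)

# Made-up corpus, purely for illustration
corpus = "the bank briefs the client and the bank briefs the manager"
model = train_bigram(corpus)
print(generate(model, "bank", max_new_tokens=3))  # bank briefs the bank
```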

2. LCM (Latent Consistency Model)

The structured dreamer

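LCMs are newer models distilled from latent diffusion models. They turn inputs such as text prompts into structured outputs, most notably images, in a handful of sampling steps rather than the dozens a standard diffusion model typically needs.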

Use Case: Advertising creatives, branded visual generation, fashion and interior concept design.

Example: A luxury retailer uses LCMs to generate bespoke marketing visuals tailored to each campaign narrative, reducing design iteration cycles by 70%.

3. LAM (Large Action Model)

The decision-maker and doer

LAMs go beyond perception—they act. These models are built for agentic workflows, capable of parsing intent, breaking down tasks, and executing them autonomously.

Use Case: Personal AI assistants, enterprise workflow agents, robotics control.

Example: A multinational logistics firm is trialling LAMs to power autonomous warehouse agents that optimise packing sequences in real time.
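The parse, decompose, execute pattern can be sketched in a few lines. Everything below is hypothetical: the keyword-based `plan` function stands in for the model itself, and the tool names and inventory are invented for illustration. In a real LAM the model would produce the plan and choose the tools.

```python
def plan(request):
    """Hypothetical planner: map a recognised intent to an ordered list of steps."""
    if "pack" in request.lower():
        return ["scan_items", "sort_by_weight", "set_packing_order"]
    return []  # unrecognised intent: do nothing

def scan_items(state):
    # Made-up inventory as (name, weight in kg)
    state["items"] = [("mug", 0.4), ("lamp", 2.5), ("book", 0.9)]

def sort_by_weight(state):
    # Heaviest first, so heavy items go at the bottom of the box
    state["items"].sort(key=lambda item: item[1], reverse=True)

def set_packing_order(state):
    state["packing_order"] = [name for name, _ in state["items"]]

# Registry of actions the agent is allowed to take
TOOLS = {
    "scan_items": scan_items,
    "sort_by_weight": sort_by_weight,
    "set_packing_order": set_packing_order,
}

def run_agent(request):
    """Execute each planned step in order, logging what was done."""
    state = {"log": []}
    for step in plan(request):
        TOOLS[step](state)
        state["log"].append(step)
    return state

result = run_agent("Pack this order")
print(result["packing_order"])  # ['lamp', 'book', 'mug']
```

Keeping the allowed actions in an explicit registry is one simple way to bound what an agent can do, and the log gives a trace of what it actually did.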

4. MoE (Mixture of Experts)

The collaborative specialist

Rather than running every parameter for every input, an MoE model contains several expert sub-networks plus a learned router that sends each token to only a few of them. Only the selected experts run, which saves compute and lets individual experts specialise.

Use Case: Scalable multi-domain chatbots, hybrid task systems (e.g., finance + legal + tech).

Example: A large insurer is using an MoE architecture to manage underwriting queries, where different “experts” handle regulatory, financial, and actuarial logic.
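For readers who want to see the routing mechanics, here is a minimal sketch of top-k gating on a single number. The experts are plain functions and the router is a hypothetical linear scorer with hand-picked parameters. In a real MoE both are learned neural layers operating on token vectors.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x, router_params, experts, k=2):
    """Route x to the k best-scoring experts and mix their outputs."""
    # Router: one score per expert (hypothetical linear form w * x + b)
    scores = [w * x + b for w, b in router_params]
    # Keep only the k experts with the highest scores
    top = sorted(range(len(experts)), key=scores.__getitem__, reverse=True)[:k]
    # Renormalise over the selected experts only
    weights = softmax([scores[i] for i in top])
    # Only the selected experts are evaluated; the rest stay idle
    output = sum(w * experts[i](x) for w, i in zip(weights, top))
    return output, top

# Four toy experts and hand-picked router parameters (illustrative only)
experts = [lambda x: x + 1, lambda x: x * 2, lambda x: x ** 2, lambda x: -x]
router_params = [(0.0, 0.0), (1.0, 0.0), (1.0, 0.0), (-1.0, 0.0)]

output, chosen = moe_forward(3.0, router_params, experts, k=2)
print(output, chosen)  # 7.5 [1, 2]
```

With `k=2` out of four experts, half of the expert computation is skipped for this input, which is where the compute saving comes from.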

5. VLM (Vision-Language Model)

The cross-modal interpreter

These are the glue between vision and language—models that understand and generate across both modalities.

Use Case: Medical imaging captioning, document analysis, video question answering.

Example: In healthcare, VLMs are being used to auto-generate radiology summaries from scans, reducing reporting lag and enabling quicker diagnostics.

6. SLM (Small Language Model)

The edge-ready workhorse

SLMs are compact, efficient versions of LLMs—optimised for local inference and on-device deployment.

Use Case: Edge AI for customer kiosks, offline voice assistants, embedded systems.

Example: A telco deployed SLMs in retail kiosks across rural regions, enabling natural language interactions without relying on cloud connectivity.

7. MLM (Masked Language Model)

The quiet learner

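MLMs aren’t flashy. They are pretrained with a self-supervised masking objective: some tokens in the input are hidden, and the model learns language structure by predicting them from the context on both sides. BERT is the best-known example.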

Use Case: Base model training, document classification, contextual embeddings.

Example: An edtech platform used MLM-based embeddings to power a semantic search engine for curriculum materials, improving knowledge retrieval for teachers.
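The masking step itself is simple enough to sketch. The function below is a simplified illustration with assumed names and a whitespace tokeniser. It hides a random subset of tokens and records the originals as training targets. Production recipes such as BERT's add refinements that are omitted here.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Hide a random subset of tokens; return (masked input, training labels)."""
    rng = random.Random(seed)  # seeded so the example is repeatable
    masked, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            masked.append(MASK)
            labels.append(tok)   # the model must recover this token
        else:
            masked.append(tok)
            labels.append(None)  # no prediction needed at this position
    return masked, labels

tokens = "teachers search the curriculum for lesson materials".split()
masked, labels = mask_tokens(tokens, mask_prob=0.3)
print(masked)
print(labels)
```

No human annotation is needed: the labels come from the text itself, which is what makes the objective self-supervised.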

8. SAM (Segment Anything Model)

The visual cutter

SAMs turn visual prompts, such as a clicked point or a bounding box, into precise segmentation masks, which is crucial for anything that requires slicing up visual data.

Use Case: Medical imaging segmentation, autonomous driving, AR object tagging.

Example: A smart farming startup uses SAMs to segment plant health regions in drone images, optimising pesticide and nutrient application zones.

Final Thoughts:

Understanding these specialised AI models isn’t just an academic exercise. It’s how we design more trustworthy, efficient, and human-centric systems. Each model type is a lens—shaping how machines see, act, learn, or create. And increasingly, organisations need a portfolio approach to AI—not a one-model-fits-all mindset.

As always, the goal is not just to build AI—but to build better human experiences (HX) through AI. And that starts with knowing which engine you’re running.
