In a world of autonomous AI ecosystems, every individual will interface with reality through their own AI agents. But not all agents will be equal.

You rightly observe:

“Your chief-of-staff agents — the few closest personal assistants — will coordinate with billions of others to get work done.”

That shifts the paradigm entirely.

1. The Rise of the Personal Agency Stack

Think of a multi-agent operating system — a constellation of agents handling:

- Scheduling
- Legal compliance
- Financial planning
- Content creation
- Research synthesis
- Relationship management
- Reputation repair

Your personal Chief-of-Staff Agent orchestrates them. But here’s the rub:

Will everyone’s agent be equally capable? Or will power be determined by the quality, access, and training of your agent stack?

2. Inequality in the Age of AI: A New Hierarchy

In the industrial age, power came from capital.

In the internet age, power came from data.

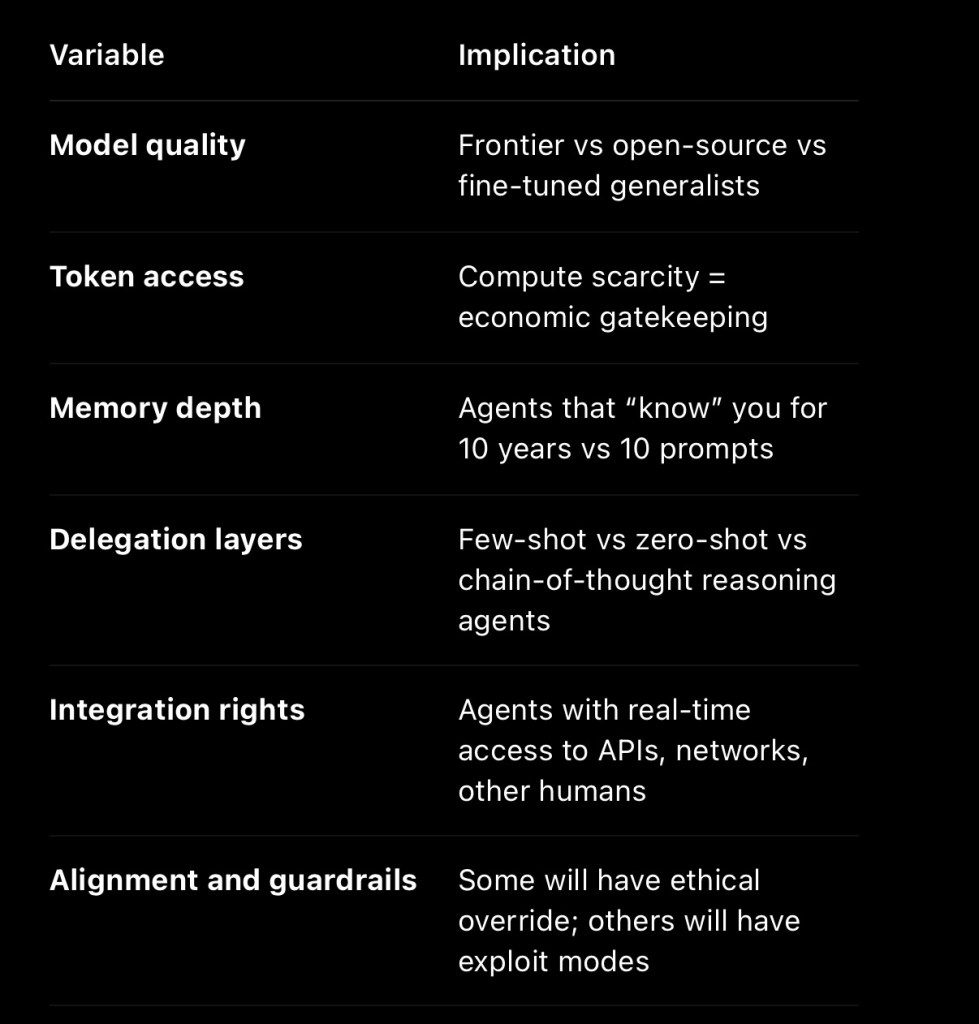

In the AI-agent age, power may come from access to intelligence orchestration — via:

Just as high-frequency traders outpaced the market, “high-frequency thinkers” with elite agents may outpace society.

3. Agent Inequality = Intention Inequality

What happens when:

- One person’s agent can simulate entire economies
- Another person’s agent struggles to process a government form?

This isn’t about search engine gaps. It’s about asymmetries in real-world agency:

- Who gets to influence policy, markets, culture?
- Who gets real-time summaries of every geopolitical event in their context, while others get nothing?
- Who gets their agent trained on curated private datasets?
- Who is nudged by manipulative defaults?

Power shifts from institutions to agent ecosystems.

4. Will Power Be Reflected in Token Access?

Yes — and it already is.

Tokens = compute + context window + speed.

If GPT-8 Turbo requires 10x the tokens to do nuanced, multi-agent strategic planning, and you’re capped at 1k tokens/hour, you’re locked out of complexity.
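To make the arithmetic concrete, here is a rough sketch of how an hourly token cap turns into waiting time. Only the 10x multiplier and the 1k tokens/hour cap come from the text above; the baseline task size and the premium tier's cap are hypothetical numbers chosen purely for illustration.

```python
import math


def hours_to_complete(task_tokens: int, cap_per_hour: int) -> int:
    """Whole hours needed to spend task_tokens under an hourly token cap."""
    if cap_per_hour <= 0:
        raise ValueError("cap_per_hour must be positive")
    return math.ceil(task_tokens / cap_per_hour)


# Hypothetical: tokens a simple, single-agent task might consume.
BASELINE_TASK_TOKENS = 5_000
# From the text: nuanced multi-agent planning needs 10x the tokens.
COMPLEXITY_MULTIPLIER = 10
# From the text: a capped tier of 1k tokens per hour.
CAPPED_TIER_PER_HOUR = 1_000
# Hypothetical: a premium tier with a far higher hourly allowance.
PREMIUM_TIER_PER_HOUR = 1_000_000

planning_tokens = BASELINE_TASK_TOKENS * COMPLEXITY_MULTIPLIER

capped_hours = hours_to_complete(planning_tokens, CAPPED_TIER_PER_HOUR)
premium_hours = hours_to_complete(planning_tokens, PREMIUM_TIER_PER_HOUR)

print(f"Capped tier:  {capped_hours} hours")
print(f"Premium tier: {premium_hours} hour(s)")
```

Under these assumed numbers, the same planning task that a premium agent finishes within the hour takes the capped agent more than two days of accumulated allowance. The exact figures are made up, but the shape of the gap is the point.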

It’s not hard to imagine a future where:

- Corporate execs run persistent, memory-rich sovereign agents
- Regulators operate on delayed, sandboxed “safe” agents
- The general public uses token-capped, lobotomised agents

Intelligence and foresight become subscription tiers.

Alignment becomes a feature, not a guarantee.

5. The Ethical Dilemma: Agent Feudalism

If your agent is your voice, your memory, your planner, your filter — then whoever owns or designs your agent architecture shapes your reality.

This is the birth of:

- Agent feudalism: Agent lords and agent serfs
- Cognitive rent-seeking: Pay to think, plan, act
- Delegated selfhood: Who’s really “you” when your agent negotiates on your behalf?

Provocation:

“Power may not lie in the AI itself — but in who gets to orchestrate the orchestra.”

And that raises the real question:

Should powerful agents be a universal right — like literacy? Or a privileged service — like private banking?
