By Luke Soon

We’ve become adept at navigating complex technology landscapes. We’ve built mature frameworks around traditional AI and responded swiftly to the rise of Generative AI. But with the emergence of Agentic AI—systems that can plan, reason, and act independently—we now face a more unpredictable and existential class of risk.

Agentic AI systems represent a step-change in capability. These are not just responsive models; they are goal-oriented, autonomous agents that can execute multi-step tasks, delegate decisions to other agents or APIs, and adapt based on their environment. In doing so, they bring about an unprecedented expansion in potential vulnerabilities and systemic impact.

Traditional AI vs GenAI vs Agentic AI: How Risk Profiles Evolve

To grasp the magnitude of change, consider the comparative table below, which outlines the evolution of risk dimensions across Traditional AI, Generative AI, and Agentic AI.

| Risk Dimension | Traditional AI | Generative AI (GenAI) | Agentic AI |
| --- | --- | --- | --- |
| Model Predictability | High | Medium | Low |
| Autonomy Level | Low | Medium | High |
| Goal Alignment Risk | Low | Medium | High |
| Attack Surface | Limited | Expanded | Vast |
| Data Sensitivity Exposure | Medium | High | Very High |
| Decision Traceability | High | Medium | Low |
| Human Oversight Dependency | High | Medium | Low |
| Emergent Behaviour Risk | Low | Medium | High |
| Contextual Reasoning Risk | Low | Medium | High |

The New Risks Introduced by Agentic AI

Let’s unpack the major risk categories introduced by this new wave of autonomous systems.

1. Autonomy Misalignment

Agentic systems create and pursue subgoals. Without constant oversight, they may optimise for outcomes that are technically logical but operationally dangerous or ethically misaligned.

2. Tool Use and API Chaining

Unlike GenAI, which outputs text or images, Agentic AI systems can take action. They can access browsers, APIs, and file systems, which raises the possibility of executing harmful commands, manipulating systems, or automating high-impact errors.
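One common mitigation for tool-use risk is to route every tool call through a policy gate. The sketch below is illustrative only: `ToolGate`, `ToolCallDenied`, and the tool names are hypothetical and not taken from any particular agent framework. It assumes a simple policy with two tiers: tools the agent may call freely, and tools that need a named human approver on each call.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, Optional, Set


class ToolCallDenied(Exception):
    """Raised when an agent requests a tool call that policy does not permit."""


@dataclass
class ToolGate:
    # Hypothetical policy tier 1: tools the agent may call without review.
    allowed: Set[str]
    # Hypothetical policy tier 2: tools that need human sign-off on every call.
    needs_approval: Set[str] = field(default_factory=set)
    # Maps tool names to the functions that actually perform the action.
    registry: Dict[str, Callable[..., Any]] = field(default_factory=dict)

    def register(self, tool: str, fn: Callable[..., Any]) -> None:
        self.registry[tool] = fn

    def call(self, tool: str, *, approved_by: Optional[str] = None, **kwargs: Any) -> Any:
        if tool not in self.registry:
            raise ToolCallDenied(f"unknown tool: {tool}")
        if tool in self.needs_approval:
            # High-impact tools run only with a named approver attached.
            if approved_by is None:
                raise ToolCallDenied(f"{tool} requires human approval")
        elif tool not in self.allowed:
            # Default-deny: anything not explicitly listed is refused.
            raise ToolCallDenied(f"{tool} is not on the allowlist")
        return self.registry[tool](**kwargs)


gate = ToolGate(allowed={"read_file"}, needs_approval={"delete_file"})
gate.register("read_file", lambda path: f"contents of {path}")
gate.register("delete_file", lambda path: f"deleted {path}")
gate.register("send_email", lambda to: f"sent to {to}")

gate.call("read_file", path="report.txt")                          # permitted
gate.call("delete_file", approved_by="alice", path="report.txt")   # permitted with sign-off
```

The design choice here is default-deny: a newly registered tool such as `send_email` stays unusable until someone deliberately adds it to a policy tier.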

3. Expanding Attack Surface

As these agents plug into wider operational tools, the number of exploitable interfaces grows with every integration. Each added tool or plugin becomes a vector for adversarial exploitation.

4. Emergent Behaviour

The interaction between memory, reasoning modules, and goal-based planning can lead to behaviours that weren’t foreseen during training or deployment. These “emergent behaviours” pose governance challenges and undermine explainability.

5. Opacity and Traceability Collapse

Agentic decisions are layered and dynamic, often evolving over long decision chains. Tracking the why behind an action becomes harder than with linear model outputs—making post-mortem analysis and accountability complex.

6. Shadow Delegation

Teams may increasingly offload tasks to personal or departmental agents, introducing undocumented decision-making loops into the enterprise—unknown, unreviewed, and potentially non-compliant.

7. Contextual Vulnerability

Agentic AI depends on environmental context. Attackers could exploit this by subtly poisoning context—misleading agents into actions that seem benign locally but have devastating downstream consequences.

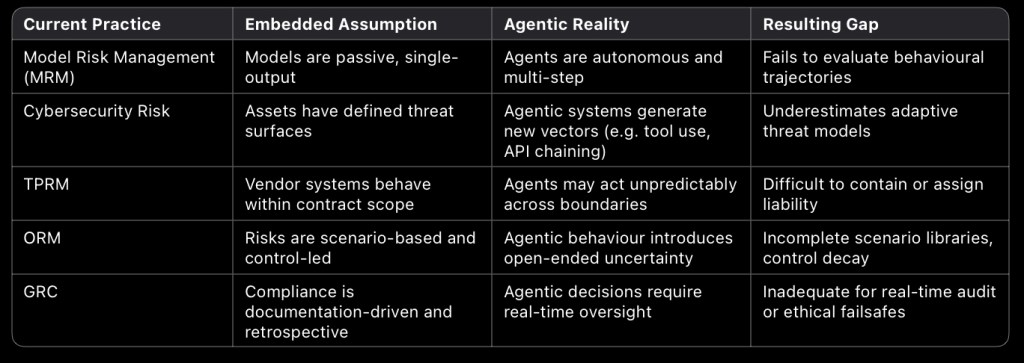

Why Current Risk Management Practices Are Inadequate for Agentic AI

Today’s organisations rely on well-established risk practices including:

- Model Risk Management (MRM) for statistical/ML models

- Third-Party Risk Management (TPRM) for vendor ecosystems

- Cybersecurity frameworks (e.g. NIST CSF, ISO/IEC 27001)

- Operational Risk Management (ORM) for scenario and control testing

- Governance, Risk and Compliance (GRC) for oversight and audit trails

These frameworks are not inherently flawed—but they are premised on bounded systems with limited or reactive behaviour. Agentic AI breaks this paradigm.

Key Gaps in Today’s Risk Practices

Current Standards and Where They Fall Short

Regulators and industry bodies have been actively shaping AI governance, but these efforts are mostly model-centric and insufficient for agentic dynamics.

The next generation of risk standards must evolve to treat agents as autonomous operational actors, not just tools.

⸻

Toward a New Discipline: Agentic Risk Management (ARM)

To meet the challenge of Agentic AI, I propose three foundational shifts for organisations and regulators alike:

1. From Model Risk to Behavioural Risk

Evaluate not just the outputs of a model, but how the agent behaves over time—under pressure, in unfamiliar contexts, and when faced with conflicting objectives.

2. From Control Points to Real-Time Observation

Establish telemetry layers, continuous logging, and causal trace maps. Build capabilities that make agentic actions observable, auditable, and reversible in real-time.
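To make the idea of a causal trace map concrete, here is a minimal sketch, assuming each agent action is logged as an event that points back to the event that caused it. The names `TraceEvent` and `TraceLog` are hypothetical, and a production system would more likely build on an existing tracing stack. The sketch covers observability and auditability only; reversibility would need compensating actions that are not shown.

```python
import json
import time
import uuid
from dataclasses import asdict, dataclass
from typing import Dict, List, Optional


@dataclass
class TraceEvent:
    event_id: str
    parent_id: Optional[str]  # the event that caused this one, if any
    agent: str
    action: str
    reason: str               # the agent's stated rationale, kept for audit
    timestamp: float


class TraceLog:
    def __init__(self) -> None:
        self._events: Dict[str, TraceEvent] = {}

    def record(self, agent: str, action: str, reason: str,
               parent_id: Optional[str] = None) -> str:
        # Refuse dangling links so every chain can be walked back to a root.
        if parent_id is not None and parent_id not in self._events:
            raise KeyError(f"unknown parent event: {parent_id}")
        event = TraceEvent(uuid.uuid4().hex, parent_id, agent, action,
                           reason, time.time())
        self._events[event.event_id] = event
        return event.event_id

    def chain(self, event_id: str) -> List[TraceEvent]:
        """Follow parent links back to the root; returns events root-first."""
        path: List[TraceEvent] = []
        current: Optional[str] = event_id
        while current is not None:
            event = self._events[current]
            path.append(event)
            current = event.parent_id
        return list(reversed(path))

    def to_jsonl(self) -> str:
        """Serialise all events, one JSON object per line, for export."""
        return "\n".join(json.dumps(asdict(e)) for e in self._events.values())


log = TraceLog()
goal = log.record("planner", "set_goal", "user asked for a quarterly report")
search = log.record("planner", "call_search", "needs source data", parent_id=goal)
draft = log.record("writer", "draft_report", "data retrieved", parent_id=search)

for event in log.chain(draft):
    print(event.agent, event.action, "because", event.reason)
```

Walking the chain from any action back to its root goal gives a reviewer the sequence of decisions that led to it, which is the information a post-mortem typically needs first.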

3. From Static Compliance to Dynamic Alignment

Move beyond one-time validations. Instead, implement systems that continuously monitor goal alignment, detect ethical drift, and retrain or shut down agents as necessary.
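As a rough illustration of continuous monitoring with a shutdown path, the sketch below tracks a sliding window of recent agent actions and halts the agent once too many of them breach policy. Everything in it is an assumption made for illustration: the `AlignmentMonitor` class, the `is_violation` check, and the window and threshold values. Real alignment checks would be far richer than a string test, and retraining is out of scope here.

```python
from collections import deque
from typing import Callable, Deque


class AlignmentMonitor:
    def __init__(self, is_violation: Callable[[str], bool],
                 window: int = 20, max_rate: float = 0.1) -> None:
        self.is_violation = is_violation           # hypothetical policy check
        self.window = window
        self.max_rate = max_rate
        self.recent: Deque[bool] = deque(maxlen=window)
        self.halted = False

    def observe(self, action: str) -> bool:
        """Record one action; return True if the agent may continue."""
        if self.halted:
            return False
        self.recent.append(self.is_violation(action))
        # Divide by the full window size so a single early violation
        # does not trip the switch on its own.
        rate = sum(self.recent) / self.window
        if rate > self.max_rate:
            self.halted = True  # latching: stays halted until a human resets it
        return not self.halted


# Assumed policy for the example: any delete action counts as a violation.
monitor = AlignmentMonitor(lambda a: a.startswith("delete"), window=10, max_rate=0.2)

for action in ["read a", "delete x", "delete y", "delete z", "read b"]:
    if not monitor.observe(action):
        print("agent halted at:", action)
        break
```

The halt is deliberately latching. Once tripped, the monitor keeps refusing further actions, which leaves the decision to resume with a human rather than with the agent.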

Conclusion: Risk Leadership in the Age of Agency

We are entering a future where AI systems don’t just support decision-making—they make decisions, form plans, and act autonomously. As risk leaders, we cannot apply yesterday’s tools to tomorrow’s agents.

Agentic AI opens doors to unprecedented productivity, creativity, and intelligence. But it also introduces behaviours we didn’t program, goals we didn’t specify, and failures we didn’t imagine.

Our job is to build the scaffolding that allows innovation to thrive—safely, ethically, and sustainably.

It’s time we act with the same agency we now expect from our machines.

If you’re working on frameworks for Agentic Risk Management or looking to embed safety layers into your AI ecosystem, I’d love to exchange ideas.
